Chapter 3 Shor’s Semidefinite Relaxation

In this Chapter, we introduce one of the most important and well-known applications of semidefinite optimization, namely its use in the formulation of convex relaxations of nonconvex optimization problems.

We will focus on the so-called Shor’s semidefinite relaxation (Shor 1987), which is particularly designed for quadratically constrained quadratic programs (QCQPs). Shor’s semidefinite relaxation is relatively easy to formulate and understand, and as we will see later, is essentially the first-order relaxation in the moment-SOS hierarchy.

3.1 Semidefinite Relaxation of QCQPs

Consider a quadratically constrained quadratic program (QCQP): \[\begin{equation} \begin{split} f^\star = \min_{x \in \mathbb{R}^{n}} & \quad x^\top C x \\ \mathrm{s.t.}& \quad x^\top A_i x = b_i, i=1,\dots,m \end{split} \tag{3.1} \end{equation}\] where \(C,A_1,\dots,A_m \in \mathbb{S}^{n}\) are given symmetric matrices and \(b = [b_1,\dots,b_m] \in \mathbb{R}^{m}\) is a given vector. We assume the problem (3.1) is feasible, solvable, and bounded from below, i.e., \(-\infty < f^\star < +\infty\) and is attained. Many practical problems in optimization, engineering, and applied sciences can be formulated as (3.1). For example, problem (3.1) includes binary quadratic optimization (BQP) problems by letting \[ A_i = e_i e_i^\top, i=1,\dots,n \] with \(e_i \in \mathbb{R}^{n}\) the standard Euclidean basis vector, in which case the \(i\)-th constraint becomes \[ x_i^2 = 1 \Leftrightarrow x_i \in \{+1,-1\},i=1,\dots,n. \] We will discuss a particular type of BQP known as the MAXCUT problem in more details later. From this simple example, since QCQP includes BQP, we know in general the problem (3.1) is nonconvex and it is NP-hard to compute \(f^\star\) and find a global optimizer.

3.1.1 Lagrangian Dual Problem

Since the original QCQP is hard to solve in general, we turn to computing a lower bound of (3.1). The most natural way to find a lower bound, according to Section 1.3.2, is to derive its Lagrangian dual problem. Towards this goal, we associate a Lagrangian multipler \(-y_i\) with each equality constraint and obtain the Lagrangian \[ L(x,y) = x^\top C x - \sum_{i=1}^m y_i (x^\top A_i x - b_i), \quad x \in \mathbb{R}^{n},y \in \mathbb{R}^{m}. \] The Lagrangian dual, by definition, is \[ \phi(y) = \min_x L(x,y) = \sum_{i=1}^m y_i b_i + \min_{x \in \mathbb{R}^{n}} x^\top\underbrace{\left( C - \sum_{i=1}^m y_i A_i \right)}_{Z} x. \] Clearly, if \(Z\) is positive semidefinite, then \(\min_x x^\top Z x = 0\) (by choosing \(x=0\)); otherwise, \(\min_x x^\top Z x = -\infty\). Therefore, the dual function is \[ \phi(y) = \begin{cases} \sum_{i=1}^m y_i b_i & \text{if } C - \sum_{i=1}^m y_i A_i \succeq 0 \\ - \infty & \text{otherwise} \end{cases}. \] The Lagrangian dual problem seeks to maximize \(y\), and hence it will make sure \(Z\) is PSD \[\begin{equation} \begin{split} d^\star = \max_{y \in \mathbb{R}^{m}} & \quad b^\top y \\ \mathrm{s.t.}& \quad C - \mathcal{A}^*(y) \succeq 0. \end{split} \tag{3.2} \end{equation}\] By Lagrangian duality, we have \[ d^\star \leq f^\star. \] Note that problem (3.2) is a convex SDP and can be solved by off-the-shelf solvers.

Another nice property of the dual problem is that it naturally leads to a certifier.

Proposition 3.1 (Dual Optimality Certifier) Let \(x_\star\) be a feasible solution to the QCQP (3.1), if there exists \(y_\star \in \mathbb{R}^{m}\) such that \[\begin{equation} C - \mathcal{A}^*(y_\star) \succeq 0, \quad [ C - \mathcal{A}^*(y_\star) ] x_\star = 0, \tag{3.3} \end{equation}\] then \(x_\star\) is a global minimizer of (3.1), and \(y_\star\) is a global maximizer of (3.2).

Proof. We have zero duality gap \[\begin{equation} \begin{split} x_\star^\top C x_\star - b^\top y_\star = \langle C, x_\star x_\star^\top \rangle - \langle b, y_\star \rangle = \langle C, x_\star x_\star^\top \rangle - \langle \mathcal{A}(x_\star x_\star^\top), y_\star \rangle \\ = \langle C - \mathcal{A}^*(y_\star), x_\star x_\star^\top \rangle = x_\star^\top[ C - \mathcal{A}^*(y_\star) ] x_\star = 0. \end{split} \end{equation}\] and \((x_\star, y_\star)\) is primal-dual feasible. Therefore, \((x_\star, y_\star)\) is primal-dual optimal.

The reason why Proposition 3.1 can be quite useful is that it gives a very efficient algorithm to certify global optimality of a candidate (potentially locally) optimal solution \(x_\star\). In particular, in several practical applications in computer vision and robotics, the second equation in (3.3) is a linear system of \(n\) equations in \(m\) variables with \(m\leq n\), and hence it has a unique solution. Therefore, one can first solve the linear system and obtain a candidate \(y_\star\), and then simply check the PSD condition \(C - \mathcal{A}^*(y_\star) \succeq 0\). This leads to optimality certifiers that can run in real time (Garcia-Salguero, Briales, and Gonzalez-Jimenez 2021) (Holmes and Barfoot 2023).

3.1.2 Dual of the Dual (Bidual)

The dual problem (3.2) should appear familiar to us at this moment – it is simply a standard-form dual SDP (2.6). Therefore, we can write down the SDP dual of the QCQP dual (3.2) \[\begin{equation} \begin{split} p^\star = \min_{X \in \mathbb{S}^{n}} & \quad \langle C, X \rangle \\ \mathrm{s.t.}& \quad \mathcal{A}(X) = b \\ & \quad X \succeq 0 \end{split} \tag{3.4} \end{equation}\] Under the assumption of SDP strong duality (e.g., both (3.4) and (3.2) are strictly feasible), we have \[ p^\star = d^\star \leq f^\star. \] The weak duality \(d^\star \leq f^\star\) can be interpreted using standard Lagrangian duality. How about the weak duality \(p^\star \leq f^\star\)? It turns out the dual of the dual (bidual) also has a nice interpretation.

We first observe that the original QCQP (3.1) is equivalent to a rank-constrained matrix optimization problem.

Proposition 3.2 (Rank-Constrained Matrix Optimization) The QCQP (3.1) is equivalent to the following rank-constrained matrix optimization \[\begin{equation} \begin{split} f^\star_m = \min_{X \in \mathbb{S}^{n}} & \quad \langle C, X \rangle \\ \mathrm{s.t.}& \quad \mathcal{A}(X) = b \\ & \quad X \succeq 0 \\ & \quad \mathrm{rank}(X) = 1 \end{split} \tag{3.5} \end{equation}\] in the sense that

\(f^\star = f^\star_m\)

For every optimal solution \(x_\star\) of the QCQP (3.1), \(X_\star = x_\star x_\star^\top\) is globally optimal for the matrix optimization (3.5)

Every optimal solution \(X_\star\) of the matrix optimization can be factorized as \(X_\star = x_\star x_\star^\top\) so that \(x_\star\) is optimal for the QCQP (3.1).

Proof. We will show that the feasible set of the QCQP (3.1) is the same as the feasible set of (3.5).

Let \(x\) be feasible for the QCQP (3.1), we have \(X=xx^\top\) must be feasible for (3.5) because \(X \succeq 0\) by construction, \(\mathrm{rank}(X) = \mathrm{rank}(xx^\top) = \mathrm{rank}(x) = 1\), and \[ x^\top A_i x = b_i \Rightarrow \mathrm{tr}(x^\top A_i x) = b_i \Rightarrow \mathrm{tr}(A_i x x^\top) = b_i \Rightarrow \langle A_i, X \rangle = b_i, \forall i. \]

Conversely, let \(X\) be feasible for the matrix optimization (3.5). Since \(X \succeq 0\) and \(\mathrm{rank}(X) = 1\), \(X = xx^\top\) must hold for some \(x \in \mathbb{R}^{n}\). In the meanwhile, \[ \langle A_i, X \rangle = b_i \Rightarrow \langle A_i, xx^\top \rangle = b_i \Rightarrow x^\top A_i x = b_i, \forall i. \] Therefore, \(x\) is feasible for the QCQP (3.1).

Finally, it is easy to observer that \[ \langle C, X \rangle = \langle C, xx^\top \rangle = x^\top C x, \] and the objective is also the same.

Since the QCQP is equivalent to the matrix optimization (3.5), one should expect the matrix optimization to be NP-hard in general as well. In fact, this is true due to the nonconvex rank constraint \(\mathrm{rank}(X) = 1\). Comparing the nonconvex SDP (3.5) and the convex SDP (3.4), we see the only difference is that we have dropped the nonconvex rank constraint in (3.4) to make it convex, hence the SDP (3.4) is a convex relaxation of the QCQP (3.1) and \(p^\star \leq f^\star\).

This convex relaxation perspective also provides a way to certify global optimality, by checking the rank of the optimal solution after solving the convex SDP (3.4).

Proposition 3.3 (Exactness of SDP Relaxation) Let \(X_\star\) be an optimal solution to the SDP (3.4), if \(\mathrm{rank}(X_\star) = 1\), then \(X_\star\) can be factorized as \(X_\star = x_\star x_\star^\top\) with \(x_\star\) a globally optimal solution to the QCQP (3.1). If so, we say the relaxation (3.4) is exact, or tight.

It is worth noting that, even when the relaxation is exact, i.e., \(p^\star = f^\star\), it may not be trivial to numerically certify the exactness, due to several reasons

If the SDP (3.4) is solved using interior point methods such as MOSEK, then it is well known that they converge to the maximum-rank solution (Wolkowicz, Saigal, and Vandenberghe 2000). This is saying that even if the SDP (3.4) has rank-one solutions, the solvers may not find them. Consider the case where \(x_1\) and \(x_2\) are both optimal solutions to the QCQP (3.1) and the relaxation is exact, it is easy to check that \[ X = \lambda_1 x_1 x_1^\top+ \lambda_2 x_2 x_2^\top, \quad \lambda_1, \lambda_2 \geq 0, \lambda_1 + \lambda_2 = 1 \] is globally optimal for the SDP. When \(x_1 x_1^\top\) and \(x_2 x_2^\top\) are linearly independent, \(X\) can have rank equal to two. In this case, interior point methods will not converge to either \(x_1x_1^\top\) or \(x_2 x_2^\top\), but will instead find \(X\) with some unknown coefficients of \(\lambda_1\) and \(\lambda_2\).

Even if the original QCQP has a unique optimal solution and the relaxation is exact, the SDP solver will converge to a solution that is approximately rank-one (i.e., the second largest sigular value / eigenvalue will still be nonzero) and it may be difficult to draw a conclusion about exactness. Therefore, in practice one can compute a relative suboptimality gap by rounding a feasible point to the QCQP from the SDP (it may or may not be easy to round a feasible point), denoted as \(\hat{x}\), then compute \[ \hat{f} = \hat{x}^\top C \hat{x}, \] which serves as an upper bound \[ p^\star \leq f^\star \leq \hat{f}. \] The relative suboptimality gap can be computed as \[ \eta = \frac{|\hat{f} - p^\star|}{1 + |\hat{f}| + |p^\star|}. \] Clearly, \(\eta \approx 0\) certifies exactness of the SDP relaxation.

3.1.3 MAXCUT

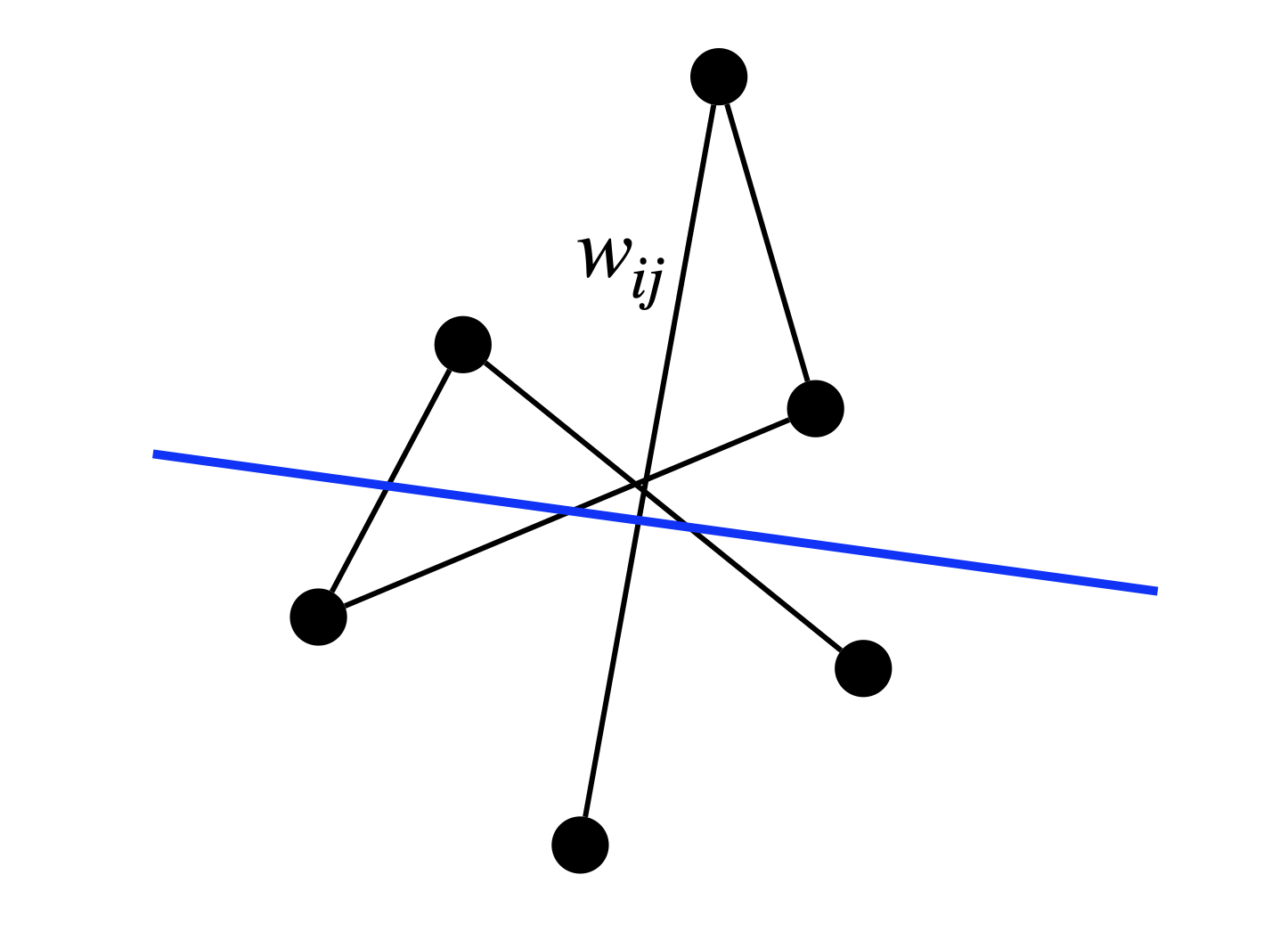

We will now study binary quadratic optimization problems, in particular the MAXCUT problem. The original QCQP reads \[\begin{equation} \begin{split} \min_{x \in \mathbb{R}^{n}} & \quad x^\top C x \\ \mathrm{s.t.}& \quad x_i^2 = 1, i=1,\dots,n. \end{split} \tag{3.6} \end{equation}\] For the MAXCUT problem, a standard formulation is \[\begin{equation} \max_{x_i^2 = 1} \frac{1}{4} \sum_{i,j} w_{ij} (1 - x_i x_j), \tag{3.7} \end{equation}\] where \(w_{ij} \geq 0\) is the weight of the edge between node \(i\) and node \(j\). It is clear that if \(x_i, x_j\) have the same sign, then \(1- x_i x_j = 0\), otherwise, \(1- x_i x_j = 2\).

Figure 3.1: MAXCUT seeks to separate the node set of a graph into two disjoint groups such that the separation line cuts as many (weighted) edges as possible. For example, the blue line cuts four edges.

Removing the constant terms in (3.7), it is equivalent to the followng BQP \[\begin{equation} \min_{x_i^2 = 1} \sum_{i,j} w_{ij} x_i x_j. \tag{3.8} \end{equation}\]

Random Rounding. In general, solving the SDP relaxation of the MAXCUT problem will not produce a certifiably optimal solution. It is therefore interesting to ask if solving the SDP relaxation can produce provably good approximations.

Let \(X\) be the optimal solution of the SDP relaxation, and \(X = V^\top V\) be a rank-\(r\) factorization with \(V \in \mathbb{R}^{r \times n}\) \[ V = [v_1,\dots,v_n] \] and each vector \(v_i \in \mathbb{R}^{r}\). We have \(X_{ij} = v_i^\top v_j\). Since \(X_{ii} = 1\), the vectors \(v_i\)’s lie on the unit sphere. Goemans and Williamson (Goemans and Williamson 1995) proposed to obtain a feasible point to the orignal BQP by first choosing a random unit direction \(p \in \mathbb{R}^{r}\) and then assign \[ x_i = \mathrm{sgn}(p^\top v_i), i=1,\dots,n. \] The expected value of this solution can be written as \[ \mathbb{E}_p \left\{ x^\top C x \right\} = \sum_{i,j} C_{ij} \mathbb{E}_p \left\{ x_i x_j \right\} = \sum_{i,j} C_{ij} \mathbb{E}_p \left\{ \mathrm{sgn}(p^\top x_i) \mathrm{sgn}(p^\top x_j) \right\} . \] This expectation can be computed using geometric intuition. Consider the plane spanned by \(v_i\) and \(v_j\) and let \(\theta_{ij}\) be the angle between them. Then, it is easy to see that the desired expectation is equal to the probability that both points are on the same side of the hyperplane, minus the probability that they are on different sides. These probabilities are \(1- \theta_{ij} / \pi\) and \(\theta_{ij} / \pi\), respectively. Therefore, the expected value of the rounded solution is \[ \sum_{i,j} C_{ij} \left( 1- \frac{2\theta_{ij}}{\pi} \right) = \sum_{i,j} C_{ij} \left( 1- \frac{2}{\pi} \arccos (v_i^\top v_j) \right) = \frac{2}{\pi} \sum_{i,j} C_{ij} \arcsin X_{ij}, \] where we have used \[ \arccos t + \arcsin t = \frac{\pi}{2}. \]

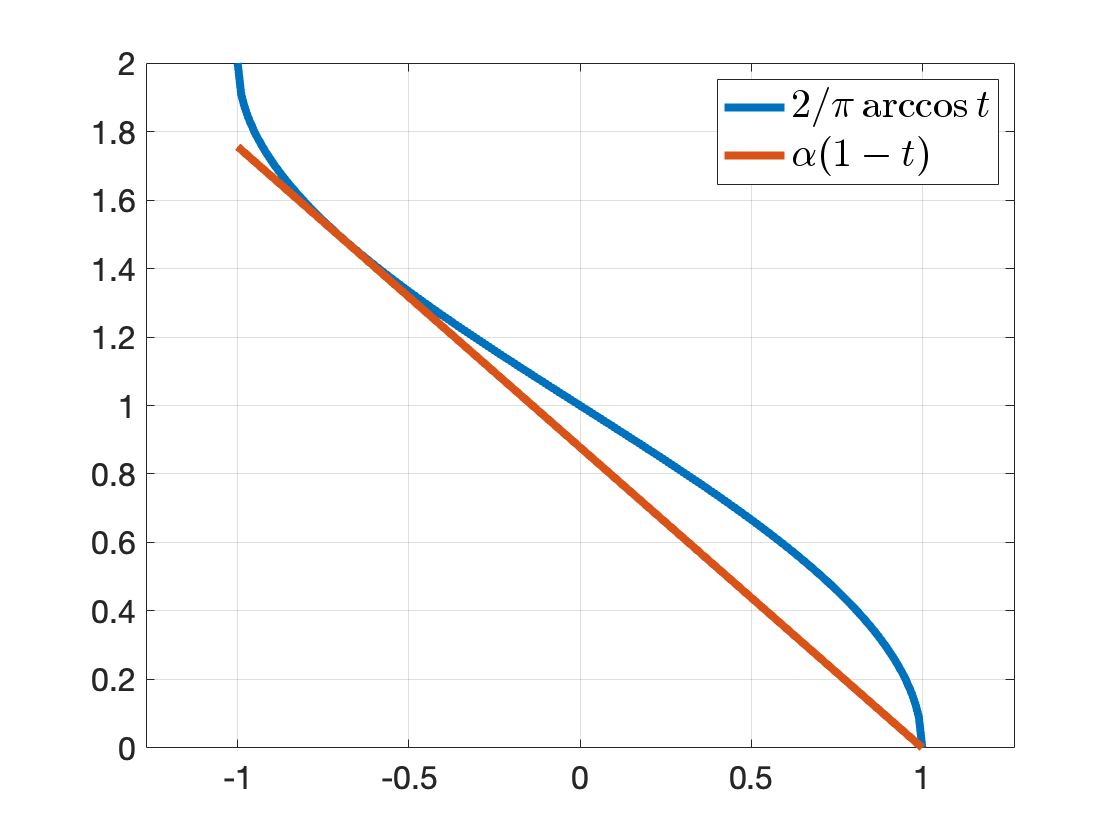

MAXCUT Bound. For the MAXCUT problem, there are constant terms involved in the original cost function, which leads to the expected cut of the rounded solution to be \[ c_{\mathrm{expected}} = \frac{1}{4} \sum_{i,j} w_{ij} \left( 1- \frac{2}{\pi} \arcsin X_{ij} \right) = \frac{1}{4} \frac{2}{\pi} \sum_{ij} w_{ij} \arccos X_{ij}. \] On the other hand, the optimal value of the SDP relaxation produces an upper bound on the true MAXCUT \[ c_{\mathrm{ub}} = \frac{1}{4} \sum_{i,j} w_{ij} (1 - X_{ij}). \] We have \[ c_{\mathrm{expected}} \leq c_{\mathrm{MAXCUT}} \leq c_{\mathrm{ub}}. \] We want to find the maximum possible \(\alpha\) such that \[ \alpha c_{\mathrm{ub}} \leq c_{\mathrm{expected}} \leq c_{\mathrm{MAXCUT}} \leq c_{\mathrm{ub}}, \] so that \(\alpha\) acts to be the best approximation ratio. To find such \(\alpha\), we need to find the maximum \(\alpha\) such that \[ \alpha (1 - t) \leq \frac{2}{\pi} \arccos(t), \forall t \in [-1,1]. \] The best possible \(\alpha\) is \(0.878\), see Fig. 3.2.

Figure 3.2: Best approximation ratio.

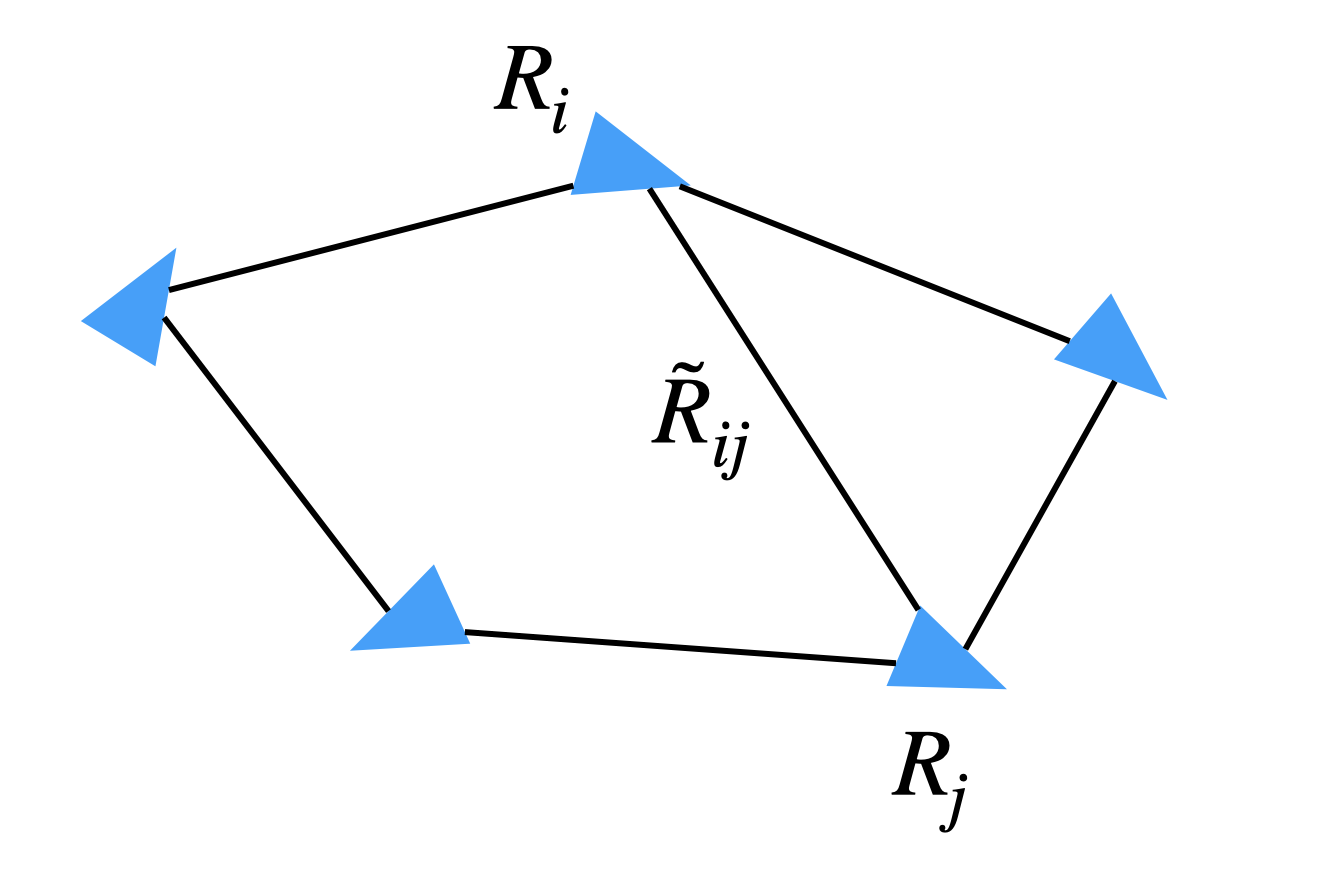

3.2 Certifiably Optimal Rotation Averaging

Consider a graph \(\mathcal{G}= (\mathcal{V},\mathcal{E})\) with node set \(\mathcal{V}= [N]\) and edge set \(\mathcal{E}= \{(i,j) \mid i,j \in \mathcal{V}\}\). Each node \(i\) is associated with an unknown rotation matrix \(R_i \in \mathrm{SO}(3)\), and each edge is associated with a relative rotation \[\begin{equation} \tilde{R}_{ij} = R_i^\top R_j \cdot R_{\epsilon}, \tag{3.9} \end{equation}\] that measures the relative rotation between \(R_i\) and \(R_j\), up to some small noise corruption \(R_{\epsilon} \in \mathrm{SO}(3)\). See Fig. 3.3.

The goal of (multiple) rotation averaging is to estimate the absolute rotations \(\{R_i \}_{i=1}^N\) given the noisy relative rotation measurements on the edges \(\{\tilde{R}_{ij} \}_{(i,j) \in \mathcal{E}}\). This problem is also known as rotation synchronization and it finds applications in computer vision (Eriksson et al. 2018), robotics (Rosen et al. 2019), and medical imaging (L. Wang and Singer 2013).

Figure 3.3: Rotation Averaging.

To synchronize the absolute rotations from relative measurements, it is common practice to formulate the following optimization problem \[\begin{equation} \min_{R_i \in \mathrm{SO}(3), i\in \mathcal{V}} \sum_{(i,j) \in \mathcal{E}} \Vert \tilde{R}_{ij} - R_i^\top R_j \Vert_\mathrm{F}^2, \tag{3.10} \end{equation}\] to seek the best absolute rotations that fit the relative measurements according to the generative model (3.9). It can be shown that when the noise \(R_\epsilon\) satisfies a Langevin distribution, then problem (3.10) returns the maximum likelihood estimator. Even when the noise distribution is not Langevin, problem (3.10) often produces accurate estimates.

QCQP Formulation. We will first simplify problem (3.10) as a QCQP. Note that the objective is equivalent to \[ \sum_{(i,j) \in \mathcal{E}} \mathrm{tr}\left( (\tilde{R}_{ij} - R_i^\top R_j)^\top(\tilde{R}_{ij} - R_i^\top R_j) \right) = \sum_{(i,j) \in \mathcal{E}} \mathrm{tr}\left( 2 \mathrm{I}_3 - 2 \tilde{R}_{ij}^\top R_i^\top R_j \right). \] Therefore, problem (3.10) is equivalent to \[\begin{equation} \min_{R_i \in \mathrm{SO}(3), i\in\mathcal{V}} -2\sum_{(i,j)\in\mathcal{E}} \mathrm{tr}(\tilde{R}_{ij}^\top R_i^\top R_j). \tag{3.11} \end{equation}\] This is a QCQP because the objective is quadratic, and \(\mathrm{SO}(3)\) can be described by quadratic equality constraints.

Matrix Formulation. Let \[ R = \begin{bmatrix} R_1^\top\\ \vdots \\ R_N^\top \end{bmatrix} \in \mathrm{SO}(3)^N,\quad RR^\top= \begin{bmatrix} R_1^\top R_1 & R_1^\top R_2 & \cdots & R_1^\top R_N \\ R_2^\top R_1 & R_2^\top R_2 & \cdots & R_2^\top R_N \\ \vdots & \vdots & \ddots & \vdots \\ R_N^\top R_1 & R_N^\top R_2 & \cdots & R_N^\top R_N \end{bmatrix} \in \mathbb{S}^{3N}_{+} \] and \[\begin{equation} \tilde{R}= \begin{bmatrix} 0 & \tilde{R}_{12} & \cdots & \tilde{R}_{1N} \\ \tilde{R}_{12}^\top& 0 & \cdots & \tilde{R}_{2N}\\ \vdots & \vdots & \ddots & \vdots \\ \tilde{R}_{1N}^\top& \tilde{R}_{2N}^\top& \cdots & 0 \end{bmatrix} \in \mathbb{S}^{3N} \tag{3.12} \end{equation}\] Then problem (3.11) can be compactly written as \[\begin{equation} \min_{R \in \mathrm{SO}(3)^N} - \langle \tilde{R}, RR^\top \rangle \tag{3.13} \end{equation}\]

Semidefinite Relaxation. Problem (3.13) is nonconvex, so we apply semidefinite relaxation. Observe that, because \(R_i \in \mathrm{SO}(3)\), we have \[ R_i^\top R_i = \mathrm{I}_3, \forall i=1,\dots,N. \] Therefore, the diagonal blocks of \(RR^\top\) are all \(3\times 3\) identity matrices. We have also that \(RR^\top\succeq 0\) by construction. Therefore, the following SDP is a convex relaxation of problem (3.13) \[\begin{equation} \begin{split} \min_{X \in \mathbb{S}^{3N}} & \quad - \langle \tilde{R}, RR^\top \rangle \\ \mathrm{s.t.}& \quad X \succeq 0 \\ & \quad [X]_{ii} = \mathrm{I}_3, \quad i=1,\dots,N \end{split} \tag{3.14} \end{equation}\] where the last constraint in (3.14) enforces all diagonal blocks to be identity matrices.

How powerful is this SDP relaxation? You may think that it will be quite loose (and hence not very useful) because we have dropped many nonconvex constraints from the original QCQP to the convex SDP (e.g., \(\mathrm{rank}(X) = 3\), \(R_i \in \mathrm{SO}(3)\) but we only used \(R_i \in \mathrm{O}(3)\)), but empirically it is almost always exact.

Example 3.1 (Rotation Averaging) I will generate a fully connected graph \(\mathcal{G}\) with \(N\) nodes.

For each node \(i\), I associate a random 3D rotation matrix \(R_i\). For the first node, I always let \(R_1 = \mathrm{I}_3\).

For each edge \((i,j)\), I generate a noisy measurement \[ \tilde{R}_{ij} = R_i^\top R_j \cdot R_\epsilon, \] where \(R_\epsilon\) is a rotation matrix with a random rotation axis and a rotation angle uniformally distributed between \(0\) and \(\beta\) degrees.

After this graph is generated, I form the \(\tilde{R}\) matrix in (3.12) and solve the SDP (3.14). This can be easily programmed in Matlab:

X = sdpvar(3*n,3*n);

F = [X >= 0];

for i = 1:n

F = [F,

X(blkIndices(i,3),blkIndices(i,3)) == eye(3)];

end

obj = trace(-Rtld*X);

optimize(F,obj);

Xval = value(X);

f_sdp = value(obj);Note that f_sdp will be a lower bound.

Let \(X_\star\) be the optimal solution of the SDP. We know that, if the SDP relaxation is tight, then \(X_\star\) will look like \(RR^\top\). Because \(R_1 = \mathrm{I}_3\), we can directly read off the optimal rotation estimations from the first row of blocks. However, if the relaxation is not tight, the blocks there will not be valid rotation matrices, and we can perform a projection onto \(\mathrm{SO}(3)\). Using the estimated rotations, I can compute f_est, which is an upper bound to the true global optimum.

R_est = [];

R_errs = [];

for i = 1:n

if i == 1

Ri = eye(3);

else

Ri = project2SO3(Xval(1:3,blkIndices(i,3)));

end

R_errs = [R_errs, getAngularError(Ri, R_gt(blkIndices(i,3),:))];

R_est = [R_est, Ri];

end

X_est = R_est'*R_est;

f_est = trace(-Rtld*X_est);With f_est and f_sdp, an upper bound and a lower bound, I can compute the relative suboptimality gap \(\eta\). If \(\eta = 0\), then it certifies global optimality.

How does this work?

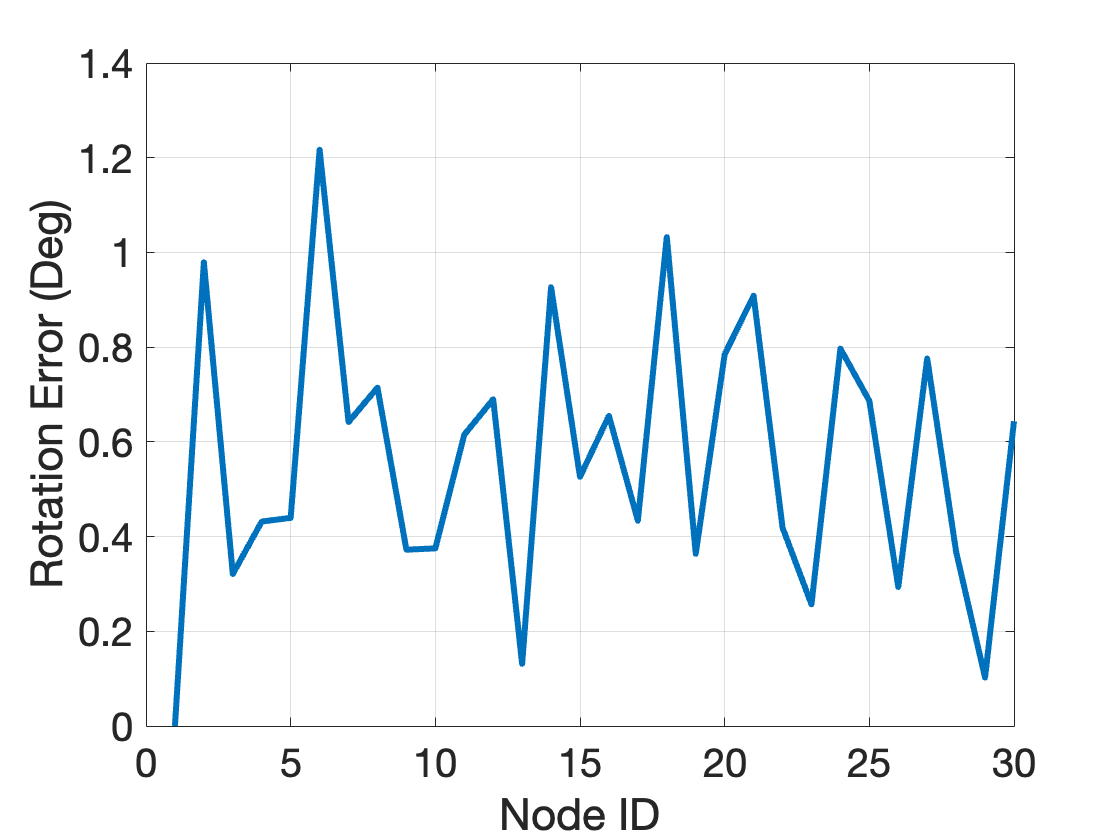

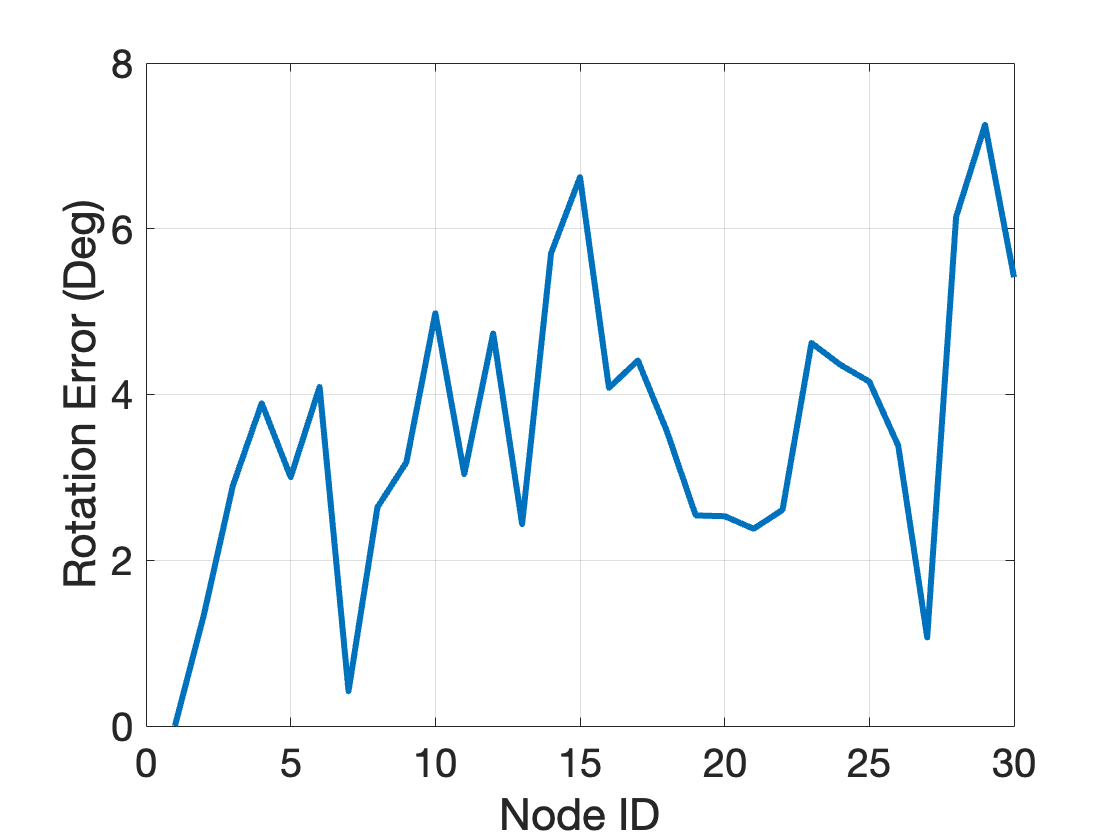

For \(N=30\) and \(\beta = 10\) (small noise), I got \(\eta = 6.26\times 10^{-13}\). Fig. 3.4 plots the rotation estimation errors at each node compared to the groundtruth rotations.

Figure 3.4: Rotation estimation errors, 30 nodes, noise bound 10 degrees.

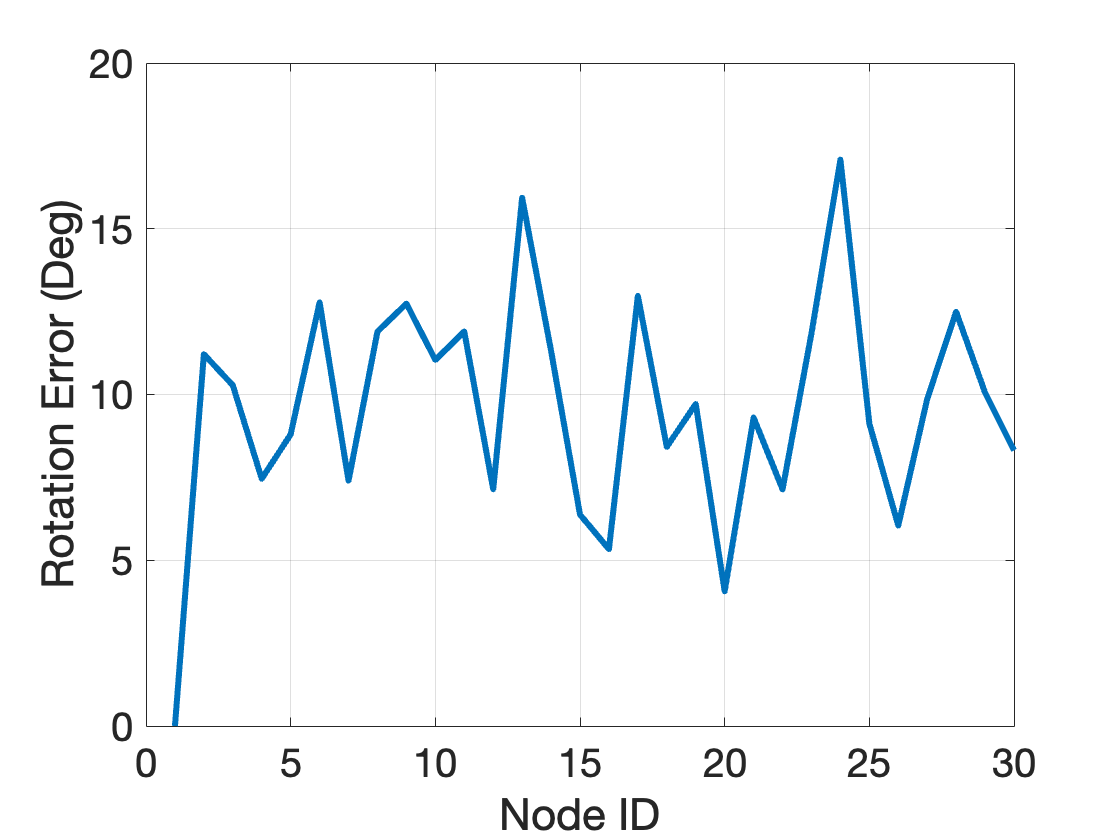

What if I increase the noise bound to \(\beta = 60\)? It turns out the relaxation is still exact with \(\eta = 6.84 \times 10^{-10}\)! Fig. 3.5 plots the rotation estimation errors at each node compared to the groundtruth rotations.

Figure 3.5: Rotation estimation errors, 30 nodes, noise bound 60 degrees.

What if I set \(\beta = 120\)? The relaxation still remains exact with \(\eta = 9.5 \times 10^{-10}\). Fig. 3.6 plots the rotation estimation errors at each node compared to the groundtruth rotations. Observe that even when the rotation estimates have large errors, they are still the certifiably optimal estimates.

Figure 3.6: Rotation estimation errors, 30 nodes, noise bound 120 degrees.

Play with the code yourself to appreciate the power of this simple SDP relaxation. You can, for example, increase the number of nodes \(N\). What if you make the graph sparse (e.g., fewer edges but still a connected graph)?

3.3 Stretch to High-Degree Polynomial Optimization

We have seen that Shor’s semidefinite relaxation for QCQPs can be derived both using Lagrangian duality, or simply by dropping a rank constraint, both of which are straightforward to understand. When applied to the MAXCUT problem, it produces a provably good approximation ratio. When applied to the rotation averaging problem, it directly gives us the optimal solution without any approximation, and the optimality comes with a certificate.

However, not every optimization problem is a QCQP, right? Is it possible to generalize Shor’s semidefinite relaxation to higher-degree polynomial optimization problems? As we will see in the next Chapters, the moment and sums-of-squares (SOS) hierarchy delivers the perfect and principled generalization.

Before going to the moment-SOS hierarchy, let me give you an example of a quartic (degree-4) optimization problem, for which we can still use Shor’s relaxation, albeit with some (in my opinion, not so elegant) mathematical massage. This example in fact is from a recent paper in computer vision (Briales, Kneip, and Gonzalez-Jimenez 2018).

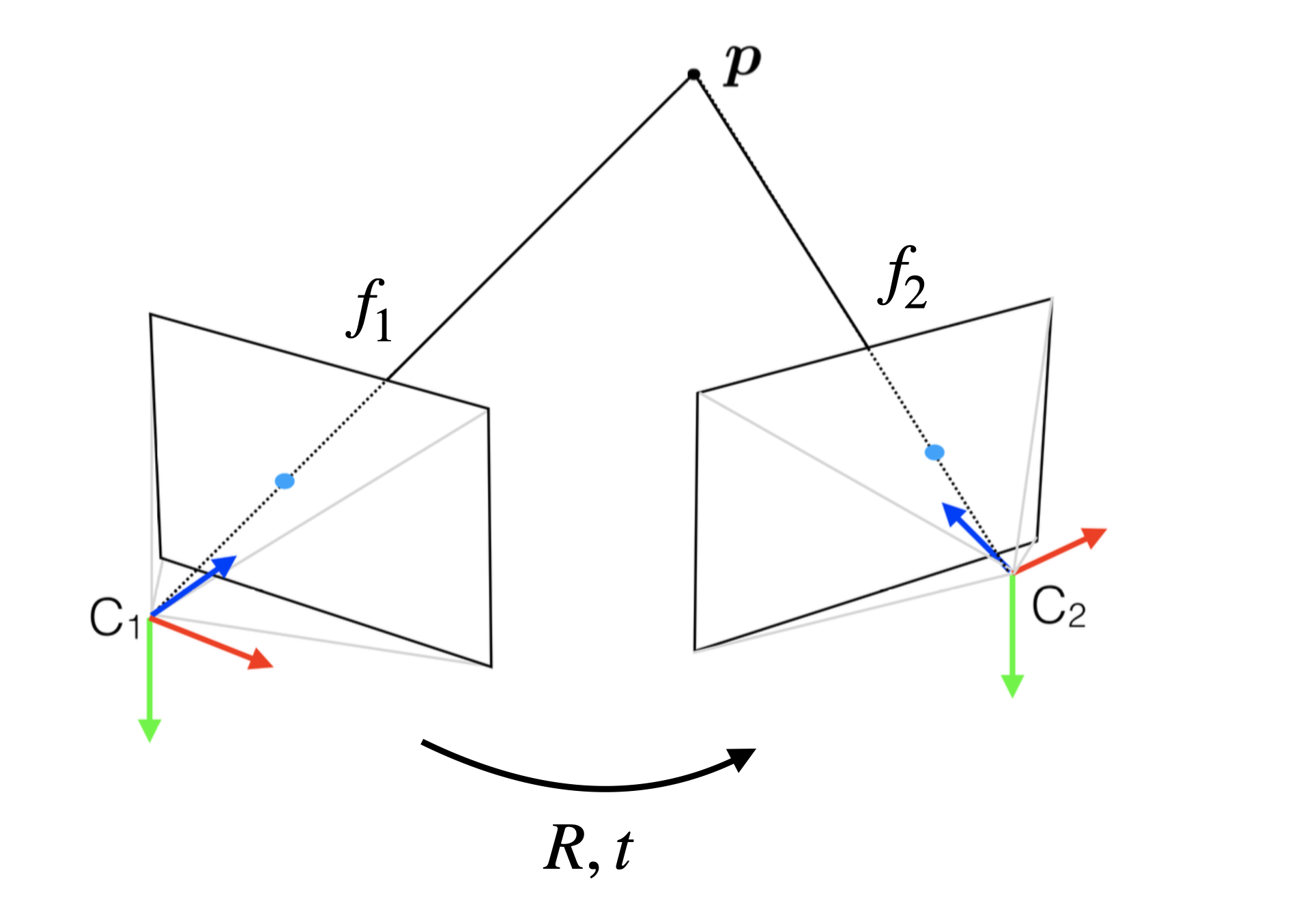

Two-view Geometry. Consider the problem of estimating the motion of a camera from two views illustrated in Fig. 3.7. Let \(C_1\) and \(C_2\) be two cameras (or the same camera but in two different positions) observing the same 3D point \(p \in \mathbb{R}^{3}\). The 3D point will be observed by \(C_1\) and \(C_2\) via its 2D projections on the image plane, respectively. Let \(f_1 \in \mathbb{R}^{3}\) and \(f_2 \in \mathbb{R}^{3}\) be the unit-length bearing vector that emanates from the camera centers to the 2D projections in two cameras, respectively. Our goal is to estimate the relative rotation and translation between the two cameras \(C_1\) and \(C_2\), denoted by \(R \in \mathrm{SO}(3)\) and \(t \in \mathbb{R}^{3}\). The pair of bearing vectors \((f_1,f_2)\) is typically known as a correspondence, or a match in computer vision.

Figure 3.7: Two-view Geometry.

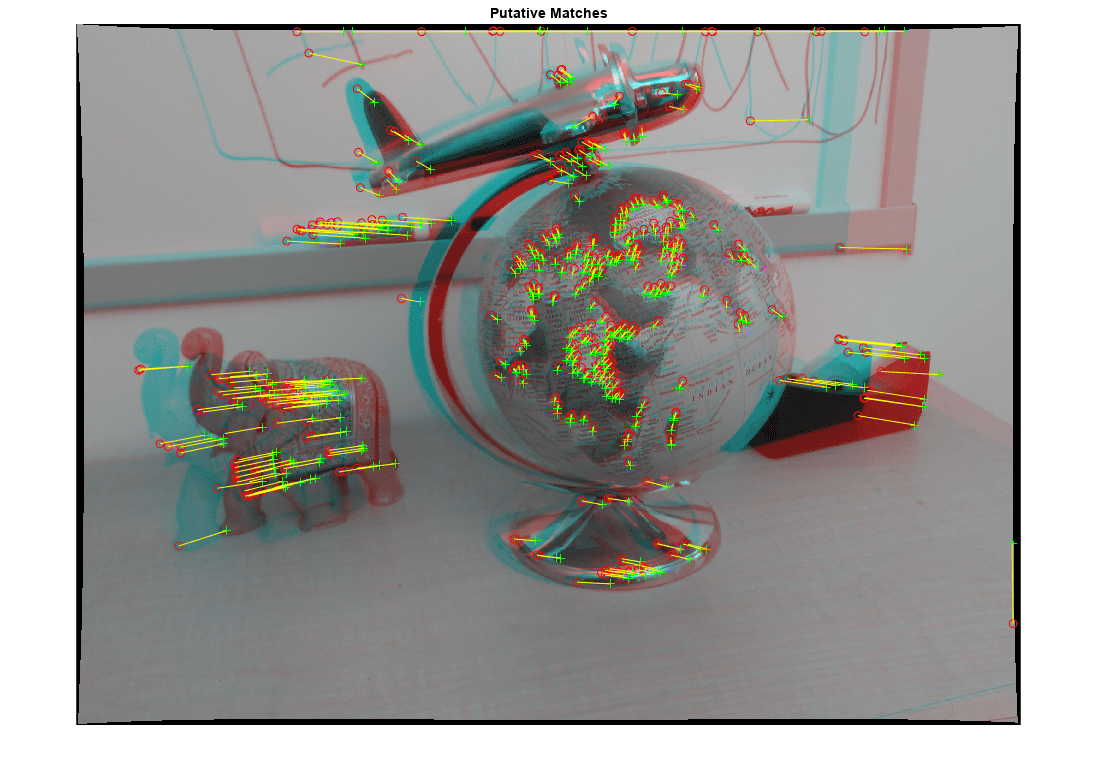

It turns out only having one correspondence is insufficient to recover \((R,t)\), and we need at least \(5\) such correspondences (Nistér 2004). See Fig. 3.8 for an example I adapted from Mathworks.

Figure 3.8: A real two-view motion estimation example. Copyright: Mathworks.

Consequently, to formally state our problem, we are given a set of \(N\) correspondences (these correspondences are typically detected by neural networks today (Q. Wang et al. 2020)) \[ \{f_{1,i},f_{2,i} \}_{i=1}^N \] between two images taken by two cameras, and our goal is to estimate the relative motion \((R,t)\).

Epipolar Constraint. When the correspondences are noise-free, it is known that they must satisfy the following epipolar constraint (Hartley and Zisserman 2003) \[\begin{equation} f_{2,i}^\top[t]_{\times} R f_{1,i} = 0, \quad \forall i=1,\dots,N, \tag{3.15} \end{equation}\] where \[ [t]_{\times} := \begin{bmatrix} 0 & - t_3 & t_2 \\ t_3 & 0 & - t_1 \\ - t_2 & t_1 & 0 \end{bmatrix} \] is a linear map such that \(t \times v = [t]_{\times} v\) for any \(v \in \mathbb{R}^{3}\) and \(\times\) denotes cross product in 3D.

Nonlinear Least Squares. Since the correspondences are detected by neural networks, they will be noisy and the epipolar constraint (3.15) will not be perfectly satisfied. Therefore, we formulate the following optimization problem to seek the best estimate that minimize the sum of the squared violations of (3.15) \[\begin{equation} \min_{R \in \mathrm{SO}(3), t \in \mathcal{S}^{2}} \sum_{i=1}^N (f_{2,i}^\top[t]_{\times} R f_{1,i})^2 \tag{3.16} \end{equation}\] Note that I have asked \(t \in \mathcal{S}^{2}\) to lie on the unit sphere, why?

Problem (3.16) is not a QCQP anymore, because its objective is a degree-4 polynomial. However, all the constraints of (3.16) are quadratic equalities and inequalities. To see this, note that \(t \in \mathcal{S}^{2}\) can be written as \[\begin{equation} 1 - t^\top t = 0, \tag{3.17} \end{equation}\] which is a quadratic polynomial equality. The constraint \(R \in \mathrm{SO}(3)\) can also be written as quadratic equalities. Let \[ R = [c_1, c_2, c_3] \] where \(c_i\) is the \(i\)-the column. Then \(R\in \mathrm{SO}(3)\) is equivalent to \[\begin{equation} \begin{split} c_i^\top c_i - 1= 0, & \quad i=1,2,3 \\ c_i^\top c_j = 0, & \quad (i,j) \in \{ (1,2),(2,3),(3,1) \} \\ c_i \times c_j = c_k,& \quad (i,j,k) \in \{ (1,2,3),(2,3,1),(3,1,2) \}. \end{split} \tag{3.18} \end{equation}\] all of which are quadratic polynomial equalities.

Let \(r = \mathrm{vec}(R)\), \(x = [r^\top, t^\top]^\top\in \mathbb{R}^{12}\), we will collectively call all the constraints in (3.17) and (3.18) as \[ h_k(x) = 0, k=1,\dots,l, \] with \(l=16\).

Semidefinite Relaxation. Clearly, we cannot directly apply Shor’s semidefinite relaxation, because \(X = xx^\top\) only contains monomials in \(x\) of degree up to \(2\), but our objective function is degree \(4\) – this matrix variable is not powerful enough.

To fix this issue, a natural idea is to create a larger matrix variable \[\begin{equation} \hspace{-16mm} v = \begin{bmatrix} 1 \\ r \\ t \\ t_1 r \\ t_2 r \\ t_3 r \end{bmatrix} \in \mathbb{R}^{40}, \quad X = vv^\top= \begin{bmatrix} 1 & * & * & * & * & * \\ r & rr^\top& * & * & * & * \\ t & tr^\top& tt^\top& * & * & * \\ t_1 r & t_1 rr^\top& t_1 r t^\top& t_1^2 rr^\top& * & * \\ t_2 r & t_2 rr^\top& t_2 r t^\top& t_1 t_2 rr^\top& t_2^2 rr^\top& * \\ t_3 r & t_3 rr^\top& t_3 r t^\top& t_3 t_1 rr^\top& t_3 t_2 rr^\top& t_3^2 rr^\top\end{bmatrix} \in \mathbb{S}^{40}_{+} \tag{3.19} \end{equation}\] Note that now \(X\) has degree-4 monomials, which allows me to write the objective of the original problem (3.16) as \[ \langle C, X \rangle \] with a suitable constant matrix \(C \in \mathbb{S}^{40}\).

I can also write all the original constraints \(h_k(x) = 0,k=1,\dots,l\) as \[ \langle A_k, X \rangle = 0, k=1,\dots,l, \] plus an additional constraint \[ \langle A_0, X \rangle = 1, \] where \(A_0\) is all zero except its top-left entry is equal to 1.

This seems to be all we need for Shor’s relaxation, will this work?

“Redundant” Constraints. In the original paper (Briales, Kneip, and Gonzalez-Jimenez 2018), the authors found that we are missing some important constraints that we can add to the convex SDP. For example, in the matrix \(X\), we have \[ t_1^2 rr^\top+ t_2^2 rr^\top+ t_3^2 rr^\top= (t_1^2 + t_2^2 + t_3^2) rr^\top= rr^\top, \] which gives us additional linear constraints on the entries of \(X\) (for free)! Essentially, these constraints are generated by multiplying the original constraints \(h_k(x)\) by suitable monomials: \[ h_k(x) = 0 \Rightarrow h_k(x) \cdot \lambda(x) = 0, \] where \(\lambda(x)\) is a monomial such that all the monomials of \(h_k(x) \cdot \lambda(x)\) appear in the big matrix \(X\) (so that the resulting equality constraint can still be written as a linear equality on \(X\)). The authors of (Briales, Kneip, and Gonzalez-Jimenez 2018) enumerated all such constraints (by hand) and added them to the SDP relaxation, which led to the relaxation going from loose to tight/exact.

(Briales, Kneip, and Gonzalez-Jimenez 2018) called these constraints “redundant”, are they? We will revisit this after we study the moment-SOS hierarchy!