Chapter 2 Semidefinite Optimization

2.1 Positive Semidefinite Matrices

A real matrix \(A = (A_{ij}) \in \mathbb{R}^{n \times n}\) is symmetric if \(A = A^\top\), i.e., \(A_{ij} = A_{ji}\) for all \(i,j\). Let \(\mathbb{S}^{n}\) be the space of all real symmetric matrices.

Any symmetric matrix \(A\) defines a quadratic form \(x^\top A x\). A matrix \(A\) is said to be positive semidefinite (PSD) if and only if its associated quadratic form is nonnegative, i.e., \[ x^\top A x \geq 0, \quad \forall x \in \mathbb{R}^{n}. \] We use \(\mathbb{S}^{n}_{+}\) to denote the set of \(n\times n\) PSD matrices. We also write \(A \succeq 0\) to denote positive semidefiniteness when the dimension is clear.

There are several equivalent characterizations of positive semidefiniteness.

Lemma 2.1 (Positive Semidefinite Matrices) Let \(A \in \mathbb{S}^{n}\) be a symmetric matrix, the following statements are equivalent:

A is positive semidefinite.

\(x^\top A x \geq 0, \forall x \in \mathbb{R}^{n}\).

All eigenvalues of \(A\) are nonnegative.

All \(2^n-1\) principal minors of \(A\) are nonnegative.

The coefficients of \(p_A(\lambda)\) weakly alternate in sign, i.e., \((-1)^{n-k} p_k \geq 0\) for \(k=0,\dots,n-1\), where \(p_A(\lambda) = \det (A - \lambda \mathrm{I}_n)\) is the characteristics polynomial of \(A\).

There exists a factorization \(A = BB^\top\), where \(B \in \mathbb{R}^{n \times r}\) with \(r\) the rank of \(A\).

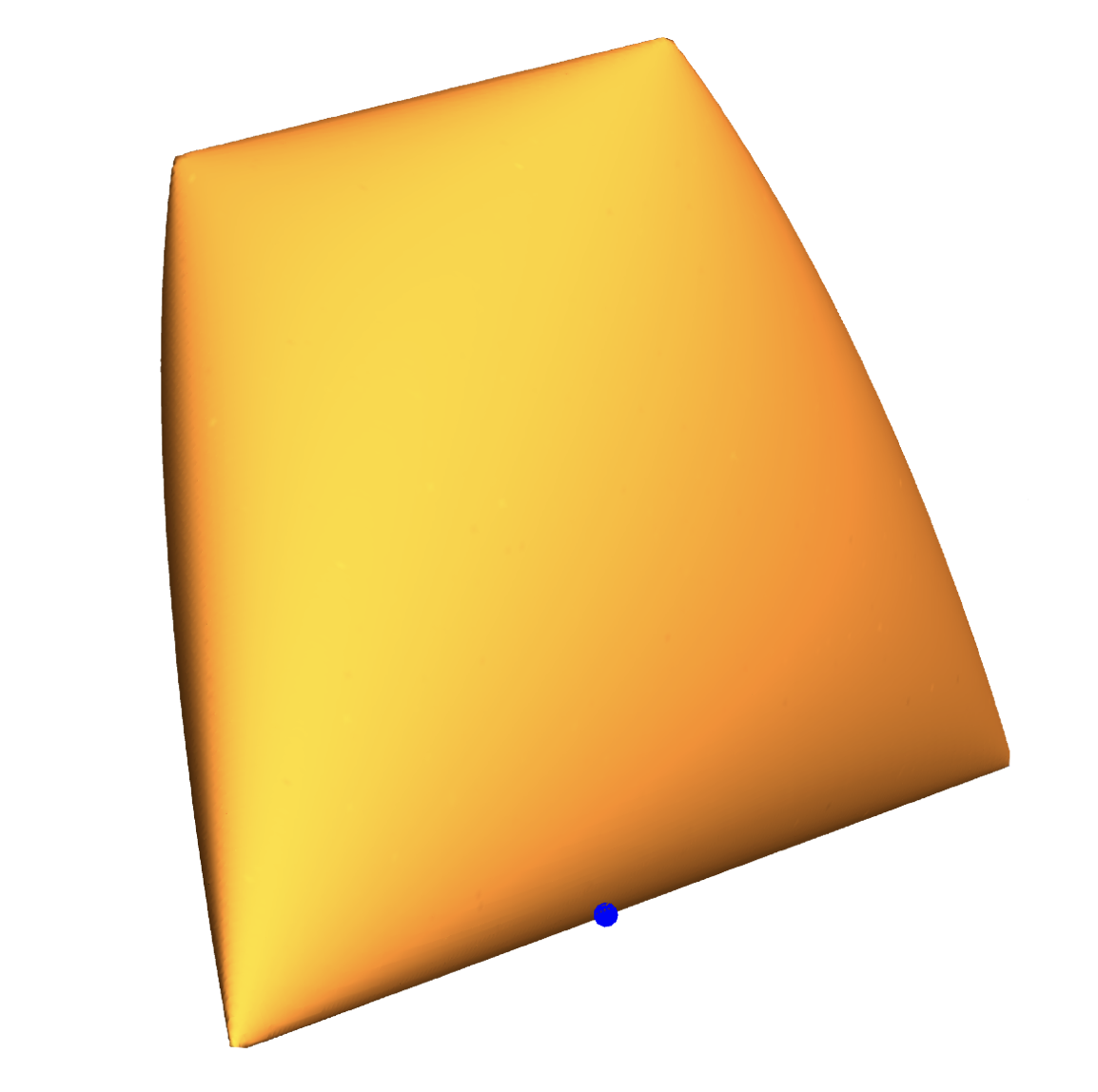

Among the equivalent characterizations of PSD matrices, (5) is less well-known, but it can be very useful when we want to convert a PSD constraint into multiple scalar constraints. For example, consider the following subset of \(\mathbb{R}^{3}\): \[ \left\{ z \in \mathbb{R}^{3} \ \middle\vert\ X(z) = \begin{bmatrix} 1 & z_1 & z_2 \\ z_1 & z_2 & z_3 \\ z_2 & z_3 & 5 z_2 - 4 \end{bmatrix} \succeq 0 \right\} . \] We can first form the characteristic polynomial of \(X(z)\) –whose coefficients will be functions of \(z\)– and then invoking (5) to obtain a finite number scalar inequality constraints. We can then pass these scalar constraints to Mathematica and plot the set as in the following figure (Yang et al. 2022).

Figure 2.1: An example spectrahedron.

Similarly, we say a matrix \(A \in \mathbb{S}^{n}\) is positive definite (PD) is its associated quadratic form is always positive, i.e., \[ x^\top A x > 0, \quad \forall x \in \mathbb{R}^{n}. \] We use \(\mathbb{S}^{n}_{++}\) to denote the set of \(n \times n\) PD matrices, and also write \(A \succ 0\) when the dimension is clear.

Below is set of equivalent characterizations of positive definite matrices.

Lemma 2.2 (Positive Definite Matrices) Let \(A \in \mathbb{S}^{n}\) be a symmetric matrix, the following statements are equivalent:

A is positive definite.

\(x^\top A x > 0, \forall x \in \mathbb{R}^{n}\).

All eigenvalues of \(A\) are strictly positive.

All \(n\) leading principal minors of \(A\) are strictly positive.

The coefficients of \(p_A(\lambda)\) strictly alternate in sign, i.e., \((-1)^{n-k} p_k > 0\) for \(k=0,\dots,n-1\), where \(p_A(\lambda) = \det (A - \lambda \mathrm{I}_n)\) is the characteristics polynomial of \(A\).

There exists a factorization \(A = BB^\top\) with \(B\) square and nonsingular (full-rank).

Schur Complements. A useful technique to check whether a matrix is positive (semi-)definite is to use the Schur Complements. Consider a block-partitioned matrix \[\begin{equation} M = \begin{bmatrix} A & B \\ B^\top& C \end{bmatrix}, \tag{2.1} \end{equation}\] where \(A\) and \(C\) are symmetric matrices. If \(A\) is invertible, then the Schur complement of \(A\) is \[ M / A = C - B^\top A^{-1}B. \] Similarly, if \(C\) is invertible, then the Schur complement of \(C\) is \[ M / C = A - B C^{-1}B^\top. \]

We have the following result relating the Schur Complements to positive (semi-)definiteness.

Proposition 2.1 (Schur Complements and PSD) Consider the block-partitioned matrix \(M\) in (2.1),

\(M\) is positive definite if and only if both \(A\) and \(M/A\) are positive definite: \[ M \succ 0 \Leftrightarrow A \succ 0, M/A = C - B^\top A^{-1}B \succ 0. \]

\(M\) is positive definite if and only if both \(C\) and \(M/C\) are positive definite: \[ M \succ 0 \Leftrightarrow C \succ 0, M/C = A - B C^{-1}B^\top\succ 0. \]

If \(A\) is positive definite, then \(M\) is positive semidefinite if and only if \(M/A\) is positive semidefinite: \[ \text{If } A \succ 0, \text{ then } M \succeq 0 \Leftrightarrow M / A \succeq 0. \]

If \(C\) is positive definite, then \(M\) is positive semidefinite if and only if \(M/C\) is positive semidefinite: \[ \text{If } C \succ 0, \text{ then } M \succeq 0 \Leftrightarrow M / C \succeq 0. \]

2.1.1 Geometric Properties

The set \(\mathbb{S}^{n}_{+}\) is a proper cone (cf. Definition 1.3). Its interior is \(\mathbb{S}^{n}_{++}\). Under the inner product \[ \langle A, B \rangle = \mathrm{tr}(AB^\top), \quad A,B \in \mathbb{R}^{n \times n}, \] the PSD cone \(\mathbb{S}^{n}_{+}\) is self-dual.

Next we want to characterize the face of the PSD cone. We first present the following lemma which will turn out to be useful afterwards.

Lemma 2.3 (Range of PSD Matrices) Let \(A,B \in \mathbb{S}^{n}_{+}\), then we have \[\begin{equation} \mathrm{Range}(A) \subseteq \mathrm{Range}(A + B), \tag{2.2} \end{equation}\] where \(\mathrm{Range}(A)\) denotes the span of the column vectors of \(A\).

Proof. For any symmetric matrix \(S\), we know \[ \mathrm{Range}(S) = \mathrm{ker}(S)^{\perp}. \] Therefore, to prove (2.2), it is equivalent to prove \[ \mathrm{ker}(A) \supseteq \mathrm{ker}(A + B). \] Pick any \(u \in \mathrm{ker}(A + B)\), we have \[ (A + B) u = 0 \Rightarrow u ^\top(A + B) u = 0 \Rightarrow u^\top A u + u^\top B u = 0 \Rightarrow u^\top A u = u^\top B u = 0, \] where the last derivation is due to \(A, B \succeq 0\). Now that we have \(u^\top A u = 0\), we claim that \(Au = 0\) must hold, i.e., \(u \in \mathrm{ker}(A)\). To see this, write \[ u = \sum_{i=1}^n a_i v_i, \] where \(a_i = \langle u, v_i \rangle\) and \(v_i,i=1,\dots,n\) are the eigenvectors of \(A\) corresponding to eigenvalues \(\lambda_i,i=1,\dots,n\). Then we have \[ Au = \sum_{i=1}^n a_i A v_i = \sum_{i=1}^n a_i \lambda_i v_i, \] and \[ u^\top A u = \sum_{i=1}^n \lambda_i a_i^2 = 0. \] Since \(\lambda_i \geq 0, a_i^2 \geq 0\), we have \[ \lambda_i a_i^2 = 0, \forall i = 1,\dots,n. \] This indicates that if \(\lambda_i > 0\), then \(a_i = 0\). Therefore, \(a_i\) can only be nonzero for \(\lambda_i = 0\), which leads to \[ Au = \sum_{i=1}^n a_i \lambda_i v_i = 0. \] Therefore, \(u \in \mathrm{ker}(A)\), proving the result.

Lemma 2.3 indicates that if \(A \succeq B\), then \(\mathrm{Range}(B) \subseteq \mathrm{Range}(A)\). What about the reverse?

Lemma 2.4 (Extend Line Segment) Let \(A,B \in \mathbb{S}^{n}_{+}\), if \(\mathrm{Range}(B) \subseteq \mathrm{Range}(A)\), then there must exist \(C \in \mathbb{S}^{n}_{+}\) such that \[ A \in (B,C), \] i.e., the line segment from \(B\) to \(A\) can be extended past \(A\) within \(\mathbb{S}^{n}_{+}\).

Proof. Since \(\mathrm{Range}(B) \subseteq \mathrm{Range}(A)\), we have \[ \mathrm{ker}(A) \subseteq \mathrm{ker}(B). \] Now consider extending the line segment past \(A\) to \[ C_{\alpha} = A + \alpha(A - B) = (1+\alpha) A - \alpha B, \] with some \(\alpha > 0\). We want to show that there exists \(\alpha > 0\) such that \(C_{\alpha} \succeq 0\).

Pick \(u \in \mathbb{R}^{n}\), then either \(u \in \mathrm{ker}(B)\) or \(u \not\in \mathrm{ker}(B)\). If \(u \in \mathrm{ker}(B)\), then \[ u^\top C_{\alpha} u = (1+\alpha) u^\top A u - \alpha u^\top B u = (1+\alpha) u^\top A u \geq 0. \] If \(u \not\in \mathrm{ker}(B)\), then due to \(\mathrm{ker}(A) \subseteq \mathrm{ker}(B)\), we have \(u \not\in \mathrm{ker}(A)\) as well. As a result, we have \[\begin{equation} u^\top C_{\alpha} u = (1+\alpha) u^\top A u - \alpha u^\top B u = (1+\alpha) u^\top A u \lparen{ 1- \frac{\alpha}{1+\alpha} \frac{u^\top B u}{u^\top A u} }. \tag{2.3} \end{equation}\] Since \[ \max_{u: u \not\in \mathrm{ker}(A)} \frac{u^\top B u}{u^\top A u} \leq \frac{\lambda_{\max}(B)}{\lambda_{\min,>0}(A)}, \] where \(\lambda_{\min,>0}(A)\) denotes the minimum positive eigenvalue of \(A\), we can always choose \(\alpha\) sufficiently small to make (2.3) nonnegative. Therefore, there exists \(\alpha > 0\) such that \(C_{\alpha} \succeq 0\).

In fact, from Lemma 2.3 we can induce a corollary.

Corollary 2.1 (Range of PSD Matrices) Let \(A, B \in \mathbb{S}^{n}_{+}\), then we have \[ \mathrm{Range}(A + B) = \mathrm{Range}(A) + \mathrm{Range}(B), \] with “\(+\)” the Minkowski sum.

Exercise 2.1 Let \(A,B \in \mathbb{S}^{n}_{+}\), show that \(\langle A, B \rangle = 0\) if and only if \(\mathrm{Range}(A) \perp \mathrm{Range}(B)\).

For a subset \(T \subseteq \mathbb{S}^{n}_{+}\), we use \(\mathrm{face}(T,\mathbb{S}^{n}_{+})\) to denote the smallest face of \(\mathbb{S}^{n}_{+}\) that contains \(T\). We first characterize the smallest face that contains a given PSD matrix, i.e., \(\mathrm{face}(A,\mathbb{S}^{n}_{+})\) for \(A\succeq 0\). Clearly, if \(A\) is PD, then \(\mathrm{face}(A, \mathbb{S}^{n}_{+}) = \mathbb{S}^{n}_{+}\) is the entire cone. If \(A\) is PSD but singular with rank \(r < n\), then \(A\) has the following spectral decomposition \[ Q^\top A Q = \begin{bmatrix} \Lambda & 0 \\ 0 & 0 \end{bmatrix}, \] where \(\Lambda \in \mathbb{S}^{r}_{++}\) is a diagonal matrix with the \(r\) nonzero eigenvalues of \(A\), and \(Q \in \mathrm{O}(n)\) is orthogonal. If \[ A = \lambda B + (1-\lambda)C, \quad B,C \in \mathbb{S}^{n}_{+},\lambda \in (0,1), \] then multiplying both sides by \(Q^\top\) and \(Q\) we have \[ \begin{bmatrix} \Lambda & 0 \\ 0 & 0 \end{bmatrix} = Q^\top A Q = \lambda Q^\top B Q + (1-\lambda) Q^\top C Q. \] Therefore, it must hold that \[ Q^\top B Q = \begin{bmatrix} B_1 & 0 \\ 0 & 0 \end{bmatrix}, \quad Q^\top C Q = \begin{bmatrix} C_1 & 0 \\ 0 & 0 \end{bmatrix}, \quad B_1 \in \mathbb{S}^{r}_{+}, C_1 \in \mathbb{S}^{r}_{+}, \] which is equivalent to \[ B = Q \begin{bmatrix} B_1 & 0 \\ 0 & 0 \end{bmatrix} Q^\top, C = Q \begin{bmatrix} C_1 & 0 \\ 0 & 0 \end{bmatrix} Q^\top, \quad B_1 \in \mathbb{S}^{r}_{+}, C_1 \in \mathbb{S}^{r}_{+}. \] We conclude that \(\mathrm{face}(A,\mathbb{S}^{n}_{+})\) must contain the set \[\begin{equation} G:= \left\{ Q \begin{bmatrix} X & 0 \\ 0 & 0 \end{bmatrix} Q^\top\ \middle\vert\ X \in \mathbb{S}^{r}_{+} \right\} . \tag{2.4} \end{equation}\]

Exercise 2.2 Show that \(G\) in (2.4) is a face of \(\mathbb{S}^{n}_{+}\), i.e., (i) \(G\) is convex; (ii) \(u \in (x,y), u \in G, x,y \in \mathbb{S}^{n}_{+} \Rightarrow x,y \in G\).

As a result, we have \(\mathrm{face}(A,\mathbb{S}^{n}_{+}) = G\).

More general faces of the PSD cone \(\mathbb{S}^{n}_{+}\) can be characterized as follows (Theorem 3.7.1 in (Wolkowicz, Saigal, and Vandenberghe 2000)).

Theorem 2.1 (Faces of the PSD Cone) A set \(F \subseteq \mathbb{S}^{n}_{+}\) is a face if and only if there exists a subspace \(L \subseteq \mathbb{R}^{n}\) such that \[ F = \{ X \in \mathbb{S}^{n}_{+} \mid \mathrm{Range}(X) \subseteq L \}. \]

Proof. It is easy to prove the “If” direction using Lemma 2.3.

First we show \(F\) is convex. Pick \(A,B \in F\). We have \(\mathrm{Range}(A) \subseteq L\) and \(\mathrm{Range}(B) \subseteq L\). Let \(v_1,\dots,v_m\) be a set of basis spanning \(L\). We have that, for any \(u \in \mathbb{R}^{n}\), \[\begin{equation} \begin{split} A u \in L & \Rightarrow Au = \sum_{i=1}^m a_i v_i, \\ B u \in L & \Rightarrow Bu = \sum_{i=1}^m b_i v_i. \end{split} \end{equation}\] So for any \(\lambda \in [0,1]\), we have \[ (\lambda A + (1-\lambda) B) u = \lambda Au + (1-\lambda) Bu = \sum_{i=1}^m (\lambda a_i + (1-\lambda) b_i ) v_i \in L, \] implying \(\lambda A + (1-\lambda) B \in F\) for any \(\lambda \in [0,1]\).

Now we show that: \[ X \in (A,B), X \in F, A,B \in \mathbb{S}^{n}_{+} \Rightarrow A, B \in F. \] From \(X = \lambda A + (1-\lambda) B\) for some \(\lambda \in (0,1)\), and invoking Lemma 2.3, we have \[\begin{equation} \begin{split} \mathrm{Range}(X) & = \mathrm{Range}(\lambda A + (1-\lambda) B) \supseteq \mathrm{Range}(\lambda A) = \mathrm{Range}(A) \\ \mathrm{Range}(X) & = \mathrm{Range}(\lambda A + (1-\lambda) B) \supseteq \mathrm{Range}((1-\lambda) B) = \mathrm{Range}(B). \end{split} \end{equation}\] Since \(\mathrm{Range}(X) \subseteq L\) due to \(X \in F\), we have \[ \mathrm{Range}(A) \subseteq L, \quad \mathrm{Range}(B) \subseteq L, \] leading to \(A,B \in F\).

The proof for the “Only If” direction can be found in Theorem 3.7.1 of (Wolkowicz, Saigal, and Vandenberghe 2000).

2.2 Semidefinite Programming

2.2.1 Spectrahedra

Recall the definition of a polyhedron in (1.12), i.e., a vector \(x\) constrained by finitely many linear inequalities. The feasible set of a Linear Program is a polyhedron.

Similarly, we define a spectrahedron as a set defined by finitely many linear matrix inequalities (LMIs). Spectrahedra are the feasible sets of Semidefinite Programs (SDPs).

A linear matrix inequality has the form \[ A_0 + \sum_{i=1}^m A_i x_i \succeq 0, \] where \(A_i \in \mathbb{S}^{n},i=0,\dots,m\) are given symmetric matrices. Correspondingly, a spectrahedron is defined by finitely many LMIs.

Definition 2.1 (Spectrahedron) A set \(S \subseteq \mathbb{R}^{m}\) is a spectrahedron if it has the form \[ S = \left\{ x \in \mathbb{R}^{m} \ \middle\vert\ A_0 + \sum_{i=1}^m x_i A_i \succeq 0 \right\} , \] for given symmetric matrices \(A_0,A_1,\dots,A_m \in \mathbb{S}^{n}\).

Note that there is no less of generality in defining a spectrahedron using a single LMI. For example, in the case of a set defined by two LMIs: \[ S = \left\{ x \in \mathbb{R}^{m} \ \middle\vert\ A_0 + \sum_{i=1}^m x_i A_i \succeq 0, B_0 + \sum_{i=1}^m x_i B_i \succeq 0 \right\} , A_i \in \mathbb{S}^{n}, B_i \in \mathbb{S}^{d}, \] we can compress the two LMIs into a single LMI by putting \(A_i\) and \(B_i\) along the diagonal: \[ S = \left\{ x \in \mathbb{R}^{m} \ \middle\vert\ \begin{bmatrix} A_0 & \\ & B_0 \end{bmatrix} + \sum_{i=1}^m x_i \begin{bmatrix} A_i & \\ & B_i \end{bmatrix} \succeq 0 \right\} . \]

Leveraging (5) of Lemma 2.1, we know that a PSD constraint is equivalent to weakly alternating signs of the characteristic polynomial of the given matrix. Therefore, a spectrahedron is defined by finitely many polynomial inequalities, i.e., a spectrahedron is a (convex) basic semialgebraic set, as seen in the following example (Blekherman, Parrilo, and Thomas 2012).

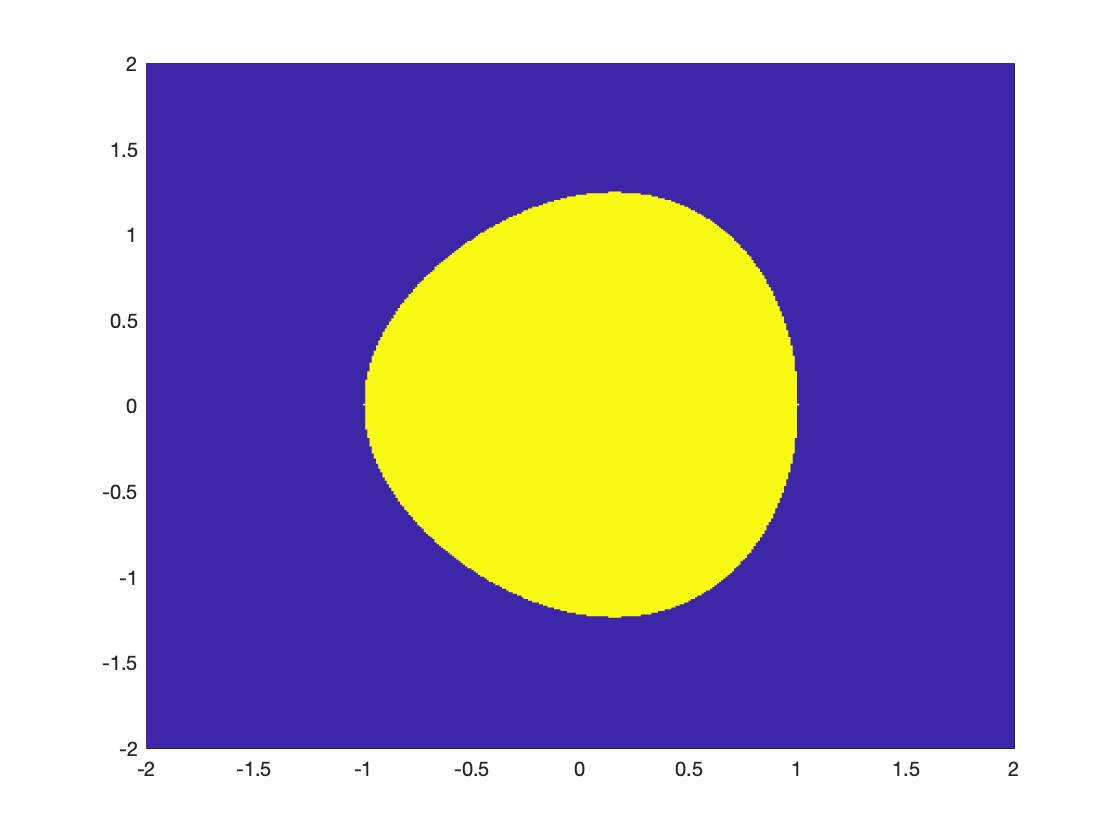

Example 2.1 (Elliptic Curve) Consider the spectrahedron in \(\mathbb{R}^{2}\) defined by \[ \left\{ (x,y) \in \mathbb{R}^{2} \ \middle\vert\ A(x,y) = \begin{bmatrix} x+1 & 0 & y \\ 0 & 2 & -x-1 \\ y & -x-1 & 2 \end{bmatrix} \succeq 0 \right\} . \] To obtain scalar inequalities defining the set, let \[ p_A(\lambda) = \det (\lambda I - A(x,y)) = \lambda^3 + p_2 \lambda^2 + p_1 \lambda + p_0 \] be the characteristic polynomial of \(A(x,y)\). \(A(x,y) \succeq 0\) is then equivalent to the coefficients weakly alternating in sign: \[\begin{equation} \begin{split} p_2 & = -(x+5) \leq 0, \\ p_1 & = -x^2 + 2x - y^2 + 7 \geq 0, \\ p_0 & = -(3+ x -x^3 -3x^2 - 2y^2) \leq 0. \end{split} \end{equation}\] We can use the following Matlab script to plot the set shown in Fig. 2.2. (The code is also available at here.) As we can see, the spectrahedron is convex, but it is not a polyhedron.

x = -2:0.01:2;

y = -2:0.01:2;

[X,Y] = meshgrid(x,y);

ineq = (-X - 5 <= 0) & ...

(-X.^2 + 2*X - Y.^2 + 7 >=0) & ...

(3 + X - X.^3 - 3*X.^2 - 2*Y.^2 >= 0);

h = pcolor(X,Y,double(ineq)) ;

h.EdgeColor = 'none' ;

Figure 2.2: Elliptic Curve.

We can use the same technique to visualize the elliptope, a spectrahedron that we will see again later when we study the MAXCUT problem.

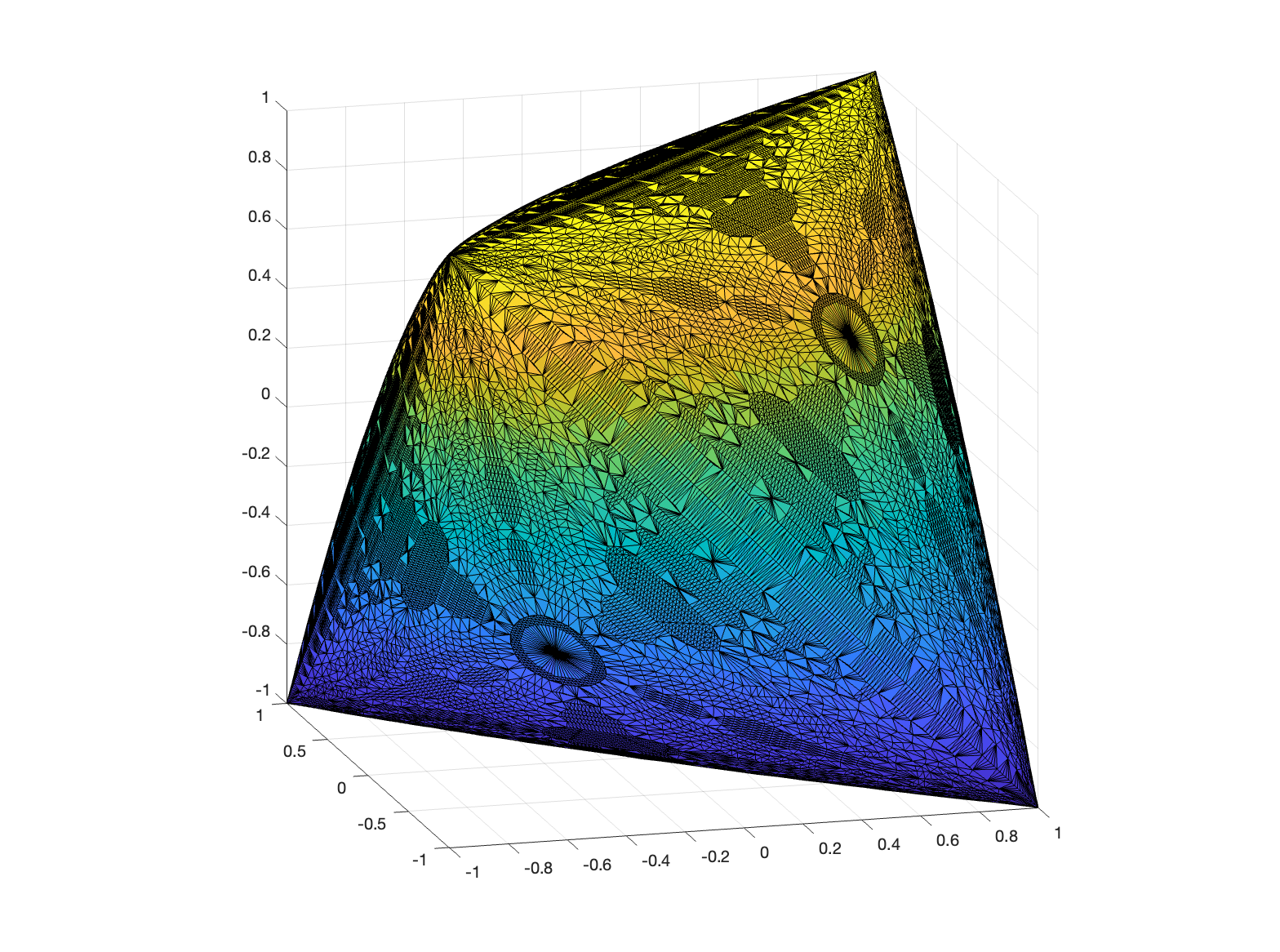

Example 2.2 (Elliptope) Consider the 3D elliptope defined by \[ \left\{ (x,y,z) \in \mathbb{R}^{3} \ \middle\vert\ A(x,y,z) = \begin{bmatrix} 1 & x & y \\ x & 1 & z \\ y & z & 1 \end{bmatrix} \succeq 0 \right\} . \] The characteristic polynomial of \(A(x,y,z)\) is \[ p_A(\lambda) = \lambda^3 - 3 \lambda^2 + (-x^2 - y^2 - z^2 + 3) \lambda + x^2 - 2 xyz + y^2 + z^2 -1. \] The coefficients need to weakly alternative in sign, we have the inequalities \[\begin{equation} \begin{split} -x^2 - y^2 - z^2 + 3 & \geq 0 \\ x^2 - 2 xyz + y^2 + z^2 -1 & \leq 0 \end{split} \end{equation}\]

Using the Matlab script here, we generate the following plot.

Figure 2.3: Elliptope.

Another example is provided in Fig. 2.1.

2.2.2 Formulation and Duality

Semidefinite programs (SDPs) are linear optimization problems over spectrahedra. A standard SDP in primal form is written as \[\begin{equation} \boxed{ \begin{split} p^\star = \min_{X \in \mathbb{S}^{n}} & \quad \langle C, X \rangle \\ \mathrm{s.t.}& \quad \mathcal{A}(X) = b, \\ & \quad X \succeq 0 \end{split} } \tag{2.5} \end{equation}\] where \(C \in \mathbb{S}^{n}\), \(b \in \mathbb{R}^{m}\), and the linear map \(\mathcal{A}: \mathbb{S}^{n} \rightarrow \mathbb{R}^{m}\) is defined as \[ \mathcal{A}(X) := \begin{bmatrix} \langle A_1, X \rangle \\ \vdots \\ \langle A_i, X \rangle \\ \langle A_m, X \rangle \end{bmatrix}. \] Recall that \(\langle C, X \rangle = \mathrm{tr}(CX)\). The feasible set of (2.5) is the intersection of the PSD cone (\(\mathbb{S}^{n}_{+}\)) and the affine subspace defined by \(\mathcal{A}(X) = b\).

Closely related to the primal SDP (2.5) is the dual problem \[\begin{equation} \boxed{ \begin{split} d^\star = \max_{y \in \mathbb{R}^{m}} & \quad \langle b, y \rangle \\ \mathrm{s.t.}& \quad C - \mathcal{A}^* (y) \succeq 0 \end{split} } \tag{2.6} \end{equation}\] where \(\mathcal{A}^{*}: \mathbb{R}^{m} \rightarrow \mathbb{S}^{n}\) is the adjoint map defined as \[ \mathcal{A}^*(y) := \sum_{i=1}^m y_i A_i. \] Observe how the primal-dual SDP pair (2.5)-(2.6) parallels the primal-dual LP pair (1.15)-(1.16).

Weak duality. We have a similar weak duality between the primal and dual. Pick any \(X\) that is feasible for the primal (2.5) and \(y\) that is feasible for the dual (2.6), we have \[ \boxed{\langle C, X \rangle - \langle b, y \rangle = \langle C, X \rangle - \langle \mathcal{A}(X), y \rangle = \langle C - \mathcal{A}^* (y), X \rangle \geq 0,} \] where the last inequality holds because both \(C - \mathcal{A}^*(y)\) and \(X\) are positive semidefinite. As a result, we have the weak duality \[ d^\star \leq p^\star. \]

Similar to the LP case, we will denote \(p^\star = +\infty\) if the primal is infeasible, \(p^\star = - \infty\) if the primal is unbounded below. We will denote \(d^\star = +\infty\) if the dual is unbounded above, and \(d^\star = -\infty\) if the dual is infeasible. We say the primal (or the dual) is solvable if it admits optimizers. We denote \(p^\star - d^\star\) as the duality gap.

Recall Theorem 1.9 states that in LP, if at least one of the primal and dual is feasible, then strong duality holds (i.e., \(p^\star = d^\star = \{\pm \infty, \text{finite} \}\)). Unfortunately, this does not carry over to SDPs. Let us provide several examples.

Example 2.3 (Failure of SDP Strong Duality) The first example, from (M. V. Ramana 1997), shows that even if both primal and dual are feasible, there could exist a nonzero duality gap. Consider the following SDP pair for some \(\alpha \geq 0\) \[ \begin{cases} \min_{X \in \mathbb{S}^{3}} & \alpha X_{11} \\ \mathrm{s.t.}& X_{22} = 0 \\ & X_{11} + 2 X_{23} = 1 \\ & \begin{bmatrix} X_{11} & X_{12} & X_{13} \\ * & X_{22} & X_{23} \\ * & * & X_{33} \end{bmatrix} \succeq 0 \end{cases}, \begin{cases} \max_{y \in \mathbb{R}^{2}} & y_2 \\ \mathrm{s.t.}& \begin{bmatrix} \alpha & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} \succeq \begin{bmatrix} y_2 & 0 & 0 \\ 0 & y_1 & y_2 \\ 0 & y_2 & 0 \end{bmatrix} \end{cases} \] To examine the primal feasible set, let us pick the bottom-right \(2\times 2\) submatrix of \(X\). The determinant of this submatrix needs to be nonnegative (due to (4) of Lemma 2.1): \[ X_{22} X_{33} - X_{23}^2 \geq 0. \] Because \(X_{22} = 0\), we have \(X_{23} = 0\) and hence \(X_{11} = 1\). Therefore, \(p^\star = \alpha\) is attained.

To examine the dual feasible set, pick the bottom-right \(2 \times 2\) submatrix of \[ \begin{bmatrix} \alpha - y_2 & 0 & 0 \\ 0 & - y_1 & -y_2 \\ 0 & -y_2 & 0 \end{bmatrix} \succeq 0, \] we have \(y_2 = 0\). As a result, \(d^\star = 0\), and strong duality fails.

The second example, from (Todd 2001), shows that the duality gap can even be infinite. Consider the primal-dual SDP \[ \begin{cases} \min_{X \in \mathbb{S}^{2}} & 0 \\ \mathrm{s.t.}& X_{11} = 0 \\ & X_{12} = 1 \\ & \begin{bmatrix} X_{11} & X_{12} \\ * & X_{22} \end{bmatrix} \succeq 0 \end{cases}, \begin{cases} \max_{y \in \mathbb{R}^{2}} & 2 y_2 \\ \mathrm{s.t.}& \begin{bmatrix} - y_1 & - y_2 \\ - y_2 & 0 \end{bmatrix} \succeq 0 \end{cases} \] Clearly, the primal is infeasible because \[ \begin{bmatrix} 0 & 1 \\ 1 & X_{22} \end{bmatrix} \] can never be PSD. So \(p^\star = + \infty\). The dual problem, however, is feasible. From the PSD constraint we have \(y_2 = 0\) and \(d^\star = 0\). Therefore, the duality gap is infinite.

The third example, from (Todd 2001), shows that even when the duality gap is zero, the primal or dual problem may not admit optimizers. Consider the primal-dual SDP \[ \begin{cases} \min_{X \in \mathbb{S}^{2}} & 2 X_{12} \\ \mathrm{s.t.}& - X_{11} = -1 \\ & - X_{22} = 0 \\ & \begin{bmatrix} X_{11} & X_{12} \\ * & X_{22} \end{bmatrix} \succeq 0 \end{cases}, \begin{cases} \max_{y \in \mathbb{R}^{2}} & - y_1 \\ \mathrm{s.t.}& \begin{bmatrix} y_1 & 1 \\ 1 & y_2 \end{bmatrix} \succeq 0 \end{cases} \] To examine the primal feasible set, we have \[ \begin{bmatrix} 1 & X_{12} \\ X_{12} & 0 \end{bmatrix} \succeq 0 \] implies \(X_{12} = 0\). Hence the primal feasible set only has one point and \(p^\star = 0\). The dual feasible set reads \[ y_1 y_2 \geq 1,\quad y_1 \geq 0, \quad y_2 \geq 0, \] and we want to minimize \(y_1\). Clearly, \(d^\star = 0\) but it is not attainable. Therefore, strong duality holds but the dual problem is not solvable.

A Matlab script that passes these three examples to SDP solvers can be found here.

The examples above are somewhat “pathological” and they show that SDPs in general can be more complicated that LPs. It turns out, with the addition of Slater’s condition, i.e., strict feasibility of the primal and dual, we can recover nice results parallel to those of LP.

Theorem 2.2 (SDP Strong Duality) Assume both the primal SDP (2.5) and the dual SDP (2.6) are strictly feasible, i.e., there exists \(X \succ 0\) such that \(\mathcal{A}(X)=b\) for the primal and there exists \(y \in \mathbb{R}^{m}\) such that \(C - \mathcal{A}^* (y) \succ 0\) for the dual, then strong duality holds, i.e., both problems are solvable and admit optimizers, and \(p^\star = d^\star\) equals to some finite number.

Further, a pair of primal-dual feasible points \((X,y)\) is optimal if and only if \[ \langle C, X \rangle = \langle b, y \rangle \Leftrightarrow \langle C - \mathcal{A}^* (y), X \rangle = 0 \Leftrightarrow (C - \mathcal{A}^* (y)) X = 0. \]

One can relax the requirement of both primal and dual being strictly feasible to only one of them being strictly feasible, and similar results would hold. Precisely, if the primal is bounded below and strictly feasible, then \(p^\star = d^\star\) and the dual is solvable. If the dual is bounded above and strictly feasible, then \(p^\star = d^\star\) and the primal is solvable (Nie 2023).

Example 2.4 (SDP Strong Duality) Consider the following primal-dual SDP pair \[ \begin{cases} \min_{X \in \mathbb{S}^{2}} & 2 X_{11} + 2 X_{12} \\ \mathrm{s.t.}& X_{11} + X_{22} = 1 \\ & \begin{bmatrix} X_{11} & X_{12} \\ * & X_{22} \end{bmatrix} \succeq 0 \end{cases}, \begin{cases} \max_{y \in \mathbb{R}^{}} & y \\ \mathrm{s.t.}& \begin{bmatrix} 2 - y & 1 \\ 1 & - y \end{bmatrix} \succeq 0 \end{cases} \] Choose \[ X = \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix} \succ 0 \] we see the primal is strictly feasible. Choose \(y = -1\), we have \[ \begin{bmatrix} 3 & 1 \\ 1 & 1 \end{bmatrix} \succ 0 \] and the dual is strictly feasible. Therefore, strong duality holds.

In this case, pick the pair of primal-dual feasible points \[ X^\star = \begin{bmatrix} \frac{2 - \sqrt{2}}{4} & - \frac{1}{2 \sqrt{2}} \\ - \frac{1}{2 \sqrt{2}} & \frac{2 + \sqrt{2}}{4} \end{bmatrix}, \quad y^\star = 1 - \sqrt{2}, \] we have \[ \langle C, X^\star \rangle = 1-\sqrt{2} = \langle b, y^\star \rangle, \] and both \(X^\star\) and \(y^\star\) are optimal.

2.3 Software for Conic Optimization

Linear optimization over the nonnegative orthant (\(\mathbb{R}^{n}_{+}\)), the second-order cone (\(\mathcal{Q}_{n}\)), and the positive semidefinite cone (\(\mathbb{S}^{n}_{+}\)) forms the foundation of modern convex optimization, commonly referred to as conic optimization. These three types of cones are self-dual, and there exist efficient algorithms to solve the convex optimization problems. Popular solvers include SDPT3, SeDuMi, MOSEK, and SDPNAL+.

In this section, we introduce how we should “talk to” the numerical solvers for conic optimization, i.e., how should we pass a mathematically written conic optimization to a numerical solver. Note that in many cases, this “transcription” can be done by programming packages such as CVX, CVXPY, YALMIP etc., but I think it is important to understand the standard interface of numerical solvers because (i) it reinforces our understanding of the mathematical basics, (ii) it gets us closer to designing custom numerical solvers for specific problems, (iii) if you are a heavy convex optimization user you will realize that many of the programming packages are not “efficient” in transcribing the original optimization problem (but they are indeed very general). I have had cases where solving the conic optimization takes a few minutes but transcribing the problem to the solver takes half an hour.

We will use the SeDuMi format as an example. Consider the following general linear convex optimization problem \[\begin{equation} \begin{split} \max_{y \in \mathbb{R}^{m}} & \quad b^\top y \\ \mathrm{s.t.}& \quad Fy = g \\ & \quad f \geq Gy \\ & \quad h_i^\top y + \tau_i \geq \Vert H_i y + p_i \Vert_2, i=1,\dots,r \\ & \quad B_{j,0} + \sum_{k=1}^m y_k B_{j,k} \succeq 0, j=1,\dots,s, \end{split} \tag{2.7} \end{equation}\] for given matrices and vectors \[ F \in \mathbb{R}^{\ell_1 \times m},G \in \mathbb{R}^{\ell_2 \times m},H_i \in \mathbb{R}^{l_i \times m}, B_{j,k} \in \mathbb{S}^{n_j}, h_i \in \mathbb{R}^{m}, p_i \in \mathbb{R}^{l_i}, \tau_i \in \mathbb{R}^{}, g \in \mathbb{R}^{\ell_1},f \in \mathbb{R}^{\ell_2}. \] Define the linear function \[ \phi(y):= \left(Fy, Gy, \begin{bmatrix} - h_1^\top y \\ - H_1 y \end{bmatrix}, \dots, \begin{bmatrix} - h_r^\top y \\ - H_r y \end{bmatrix}, - \sum_{k=1}^m y_k B_{1,k}, \dots,- \sum_{k=1}^m y_k B_{s,k} \right), \] which is a linear map from \(\mathbb{R}^{m}\) to the vector space of Cartesian products \[ V:= \mathbb{R}^{\ell_1} \times \mathbb{R}^{\ell_2} \times \mathbb{R}^{l_1+1} \times \dots \times \mathbb{R}^{l_r + 1} \times \mathbb{S}^{n_1}\times \dots \times \mathbb{S}^{n_s}. \] A vector \(X \in V\) can be written as a tuple \[ X = (x_1,x_2,\mathrm{x}_1,\dots,\mathrm{x}_r,X_1,\dots,X_s). \] Given another vector \(Y \in V\) \[ X = (y_1,y_2,\mathrm{y}_1,\dots,\mathrm{y}_r,Y_1,\dots,Y_s), \] the inner product between \(X\) and \(Y\) is defined as \[ \langle X, Y \rangle = \langle x_1, y_2 \rangle + \langle x_2, y_2 \rangle + \sum_{i=1}^r \langle \mathrm{x}_i, \mathrm{y}_i \rangle + \sum_{j=1}^s \langle X_j, Y_j \rangle. \]

Let \(\mathcal{K}\) be the Cartesian product of the free cone, the nonnegative orthant, the second-order cone, and the PSD cone \[ \mathcal{K}:= \mathbb{R}^{\ell_1} \times \mathbb{R}^{\ell_2}_{+} \times \mathcal{Q}_{l_1} \times \dots \times \mathcal{Q}_{l_r} \times \mathbb{S}^{n_1}_{+} \times \dots \times \mathbb{S}^{n_s}_{+}. \] Its dual cone is \[ \mathcal{K}^* = \{0\}^{\ell_1} \times \times \mathbb{R}^{\ell_2}_{+} \times \mathcal{Q}_{l_1} \times \dots \times \mathcal{Q}_{l_r} \times \mathbb{S}^{n_1}_{+} \times \dots \times \mathbb{S}^{n_s}_{+}. \] Note that all the cones there are self-dual except the free cone whose dual is the zero point. Then denote \[ C := \left( g,f,\begin{bmatrix} \tau_1 \\ p_1 \end{bmatrix},\dots,\begin{bmatrix} \tau_r \\ p_r \end{bmatrix},B_{1,0},\dots,B_{s,0} \right) \in V, \] we have that the original optimization (2.7) is simply the following dual conic problem \[\begin{equation} \max_{y \in \mathbb{R}^{m}} \{ b^\top y \mid C - \phi(y) \in \mathcal{K}^* \}. \tag{2.8} \end{equation}\] The linear map \(\phi(y)\) can be written as \[ \phi(y) = y_1 A_1 + \dots, y_m A_m \] for vectors \(A_1,\dots,A_m\) in the space \(V\). Therefore, the primal problem to (2.8) is \[\begin{equation} \min_{X \in V} \{ \langle C, X \rangle \mid \langle A_i, X \rangle=b_i,i=1,\dots,m,X \in \mathcal{K} \}. \tag{2.9} \end{equation}\]

Let us practice an example from (Nie 2023).

Example 2.5 (SeDuMi Example) Consider the following optimization problem as an instance of (2.7): \[\begin{equation} \begin{split} \max_{y \in \mathbb{R}^{3}} & \quad y_3 - y_1 \\ \mathrm{s.t.}& \quad y_1 + y_2 + y_3 = 3\\ & \quad -1 \geq -y_1 - y_2 \\ & \quad -1 \geq -y_2 - y_3 \\ & \quad y_1 + y_3 \geq \sqrt{(y_1-1)^2 + y_2^2 + (y_3 -1)^2} \\ & \quad \begin{bmatrix} 1 & y_1 & y_2 \\ y_1 & 2 & y_3 \\ y_2 & y_3 & 3 \end{bmatrix} \succeq 0 \end{split} \tag{2.10} \end{equation}\] Clearly we have \(b = (-1,0,1)\). The linear map \(\phi(y)\) is \[ \phi(y) = \left( y_1+y_2+y_3,\begin{bmatrix} -y_1 - y_2 \\ - y_2 - y_3 \end{bmatrix}, \begin{bmatrix} - y_1 - y_3 \\ -y_1 \\ -y_2 \\ -y_3 \end{bmatrix}, \begin{bmatrix} 0 & -y_1 & -y_2 \\ -y_1 & 0 & -y_3 \\ -y_2 & -y_3 & 0 \end{bmatrix} \right). \] The vector space \(V=\mathbb{R}^{} \times \mathbb{R}^{2} \times \mathbb{R}^{4} \times \mathbb{S}^{3}\), and the cone \(\mathcal{K}\) is \[ \mathcal{K}= \mathbb{R}^{} \times \mathbb{R}^{2}_{+} \times \mathcal{Q}_3 \times \mathbb{S}^{3}_{+}. \] The vectors \(A_1,A_2,A_3\) are \[\begin{equation} \begin{split} A_1 &= \left( 1,\begin{bmatrix}-1\\0\end{bmatrix}, \begin{bmatrix}-1\\-1\\0\\0\end{bmatrix},\begin{bmatrix}0&-1&0\\-1&0&0\\0&0&0\end{bmatrix} \right)\\ A_2 &= \left( 1,\begin{bmatrix}-1\\-1\end{bmatrix}, \begin{bmatrix}0\\0\\-1\\0\end{bmatrix},\begin{bmatrix}0&0&-1\\0&0&0\\-1&0&0\end{bmatrix} \right)\\ A_3 &= \left( 1,\begin{bmatrix}0\\-1\end{bmatrix}, \begin{bmatrix}-1\\0\\0\\-1\end{bmatrix},\begin{bmatrix}0&0&0\\0&0&-1\\0&-1&0\end{bmatrix} \right). \end{split} \end{equation}\] The vector \(C\) is \[ C = \left( 3,\begin{bmatrix}-1\\-1\end{bmatrix}, \begin{bmatrix}0\\-1\\0\\-1\end{bmatrix},\begin{bmatrix}1&0&0\\0&2&0\\0&0&3\end{bmatrix} \right). \]

To input this problem to SeDuMi, we only need to provide the data \((A,b,C)\) together with the description of the cones \(\mathcal{K}\).

% describe dimensions of the cones

K.f = 1; % free cone

K.l = 2; % nonnegative orthant

K.q = 4; % second order cone

K.s = 3; % psd cone

% provide A,b,c

c = [3,-1,-1,0,-1,0,-1,1,0,0,0,2,0,0,0,3];

b = [-1,0,1];

A = [

1,-1,0,-1,-1,0,0,0,-1,0,-1,0,0,0,0,0;

1,-1,-1,0,0,-1,0,0,0,-1,0,0,0,-1,0,0;

1,0,-1,-1,0,0,-1,0,0,0,0,0,-1,0,-1,0

];

% solve using sedumi

[xopt,yopt,info] = sedumi(A,b,c,K);Note that in providing \(A\), we vectorize each \(A_i\) and place it along the \(i\)-th row of the matrix \(A\). The above Matlab script gives us the optimal solution \[ y^\star = (0,1,2). \] To run the code, make sure you download SeDuMi and add that to your Matlab path.

2.4 Interior Point Algorithm

A nice property of semidefinite optimization is that it can be solved in polynomial time. The algorithm of choice for solving SDPs is called an interior point method (IPM), which is also what popular SDP solvers like SeDuMI, MOSEK, and SDPT3 implement under the hood.

Although it can be difficult to implement an SDP solver as efficient and robust as MOSEK (as it requires many engineering wisdom), it is surprisingly simple to sketch out the basic algorithmic framework, as it is based on Newton’s method for solving a system of nonlinear equations. Before I introduce you the basic algorithm, let me review two useful preliminaries.

Newton’s Method

Given a function \(f: \mathbb{R}^{} \rightarrow \mathbb{R}^{}\) that is continuously differentiable, Newton’s method is designed to find a root of \(f(x) = 0\). Given an initial iterate \(x^{(0)}\), Newton’s method works as follows \[ x^{(k+1)} = x^{(k)} - \frac{f(x^{(k)})}{f'(x^{(k)})}, \] where \(f'(x^{(k)})\) denotes the derivative of \(f\) at the current iterate \(x^{(k)}\). This simple algorithm is indeed (in my opinion) the most important foundation of modern numerical optimization (Nocedal and Wright 1999). Under mild conditions, Newton’s method has at least quadratic convergence rate, that is to say, if \(|x^{(k)} - x^\star| = \epsilon\), then \(|x^{(k+1)} - x^\star| = O(\epsilon^2)\). Of course, there exist pathological cases where even linear convergence is not guaranteed (e.g., when \(f'(x^\star) = 0\)).

Newton’s method can be generalized to find a point at which multiple functions vanish simultaneously. Given a function \(F: \mathbb{R}^{n} \rightarrow \mathbb{R}^{n}\) that is continuously differentiable, and an initial iterate \(x^{(0)}\), Newton’s method reads \[\begin{equation} x^{(k+1)} = x^{(k)} - J_F(x^{(k)})^{-1}F(x^{(k)}), \tag{2.11} \end{equation}\] where \(J_F(\cdot)\) denotes the Jacobian of \(F\). Iteration (2.11) is equivalent to \[\begin{equation} \begin{split} J_F(x^{(k)}) \Delta x^{(k)} & = - F(x^{(k)}) \\ x^{(k+1)} & = x^{(k)} + \Delta x^{(k)} \end{split} \tag{2.12} \end{equation}\] i.e., one first solves a linear system of equations to find an update direction \(\Delta x^{(k)}\), and then take a step along the direction.

As we will see, IPMs for solving SDPs can be interpreted as applying Newton’s method to the perturbed KKT optimality conditions.

We introduce another useful preliminary about the symmetric Kronecker product.

Symmetric Vectorization and Kronecker Product

Consider a linear operator on \(\mathbb{R}^{n \times n}\): \[\begin{equation} \begin{split} \mathbb{R}^{n \times n} & \rightarrow \mathbb{R}^{n \times n} \\ K & \mapsto N K M^\top \end{split} \tag{2.13} \end{equation}\] where \(N,M \in \mathbb{R}^{n \times n}\) are given square matrices. This linear map is equivalent to \[\begin{equation} \begin{split} \mathbb{R}^{n^2} & \rightarrow \mathbb{R}^{n^2} \\ \mathrm{vec}(K) & \mapsto (M \otimes N) \mathrm{vec}(K) \end{split} \tag{2.14} \end{equation}\] where \(\otimes\) denotes the usual kronecker product.

Symmetric vectorization and kronecker product is to generalize the above linear map on \(\mathbb{R}^{n \times n}\) to \(\mathbb{S}^{n}\).

Consider a linear operator on \(\mathbb{S}^{n}\): \[\begin{equation} \begin{split} \mathbb{S}^{n} & \rightarrow \mathbb{S}^{n} \\ K & \mapsto \frac{1}{2} \left( N K M^\top+ M K N^\top\right) \end{split} \tag{2.15} \end{equation}\] where \(N,M\in\mathbb{R}^{n\times n}\) are given square matrices. This linear map is equivalent to \[\begin{equation} \begin{split} \mathbb{R}^{n^{\Delta}} & \rightarrow \mathbb{R}^{n^{\Delta}} \\ \mathrm{svec}(K) & \mapsto (M \otimes_{\mathrm{s}}N) \mathrm{svec}(K) \end{split} \tag{2.16} \end{equation}\] where \[ n^{\Delta} = \frac{n(n+1)}{2} \] is the \(n\)-th triangle number, \(\mathrm{svec}(K)\) denotes the symmetric vectorization of \(K\) defined as \[ \mathrm{svec}(K) = \begin{bmatrix} K_{11} \\ \sqrt{2} K_{12} \\ \vdots \\ \sqrt{2} K_{1n} \\ K_{22} \\ \vdots \\ \sqrt{2} K_{2n} \\ \vdots \\ K_{nn} \end{bmatrix}, \] and \(M \otimes_{\mathrm{s}}N\) denotes the symmetric kronecker product. Note that we have \[ \langle A, B \rangle = \langle \mathrm{svec}(A), \mathrm{svec}(B) \rangle, \quad \forall A,B \in \mathbb{S}^{n}, \] and \[ M \otimes_{\mathrm{s}}N = N \otimes_{\mathrm{s}}M. \] To compute the symmetric kronecker product, see (Schacke 2004). The \(\otimes_{\mathrm{s}}\) function is readily implemented in software packages such as SDPT3.

The following property of the symmetric kronecker product is useful for us later.

Lemma 2.5 (Spectrum of Symmetric Kronecker Product) Let \(M,N \in \mathbb{S}^{n}\) be two symmetric matrices that commute, i.e., \(MN = NM\), and \(\alpha_1,\dots,\alpha_n\) and \(\beta_1,\dots,\beta_n\) be their eigenvalues with \(v_1,\dots,v_n\) a common basis of orthonormal eigenvectors. The \(n^\Delta\) eigenvalues of \(M \otimes_{\mathrm{s}}N\) are given by \[ \frac{1}{2}(\alpha_i \beta_j + \beta_i \alpha_j), \quad 1 \leq i \leq j \leq n, \] with the corresponding set of orthonormal eigenvectors \[ \begin{cases} \mathrm{svec}(v_i v_i^\top) & \text{if } i = j \\ \frac{1}{\sqrt{2}} \mathrm{svec}(v_i v_j^\top+ v_j v_i^\top) & \text{if } i < j \end{cases}. \]

It is easy to see that, if \(M,N\) are two PSD matrices that commute, then \(M \otimes_{\mathrm{s}}N\) is also PSD.

For more properties of symmetric vectorization and Kronecker product, see (Schacke 2004).

Now we are ready to sketch the interior-point algorithm.

2.4.1 The Central Path

Assuming strict feasibility, strong duality holds between the SDP primal (2.5) and dual (2.6), then \((X,y,Z)\) is primal-dual optimal if and only if they satisfy the following KKT optimality conditions \[\begin{equation} \begin{split} \mathcal{A}(X) = b, \quad X \succeq 0 \\ C - \mathcal{A}^*(y) - Z = 0, \quad Z \succeq 0 \\ \langle X, Z \rangle = 0 \Leftrightarrow XZ = 0 \end{split} \tag{2.17} \end{equation}\] The first idea in IPM is to relax the last condition in (2.17), i.e., zero duality gap, to a small positive gap, which leads to the notion of a central path.

Definition 2.2 (Central Path) A point \((X^\mu,y^\mu,Z^\mu)\) is said to lie on the central path if there exists \(\mu > 0\) such that \[\begin{equation} \begin{split} \mathcal{A}(X^\mu) = b, \quad X^\mu \succeq 0 \\ C - \mathcal{A}^*(y^\mu) - Z^\mu = 0, \quad Z^\mu \succeq 0 \\ X^\mu Z^\mu = \mu \mathrm{I} \end{split}, \tag{2.18} \end{equation}\] that is, \((X^\mu,y^\mu,Z^\mu)\) is primal and dual feasible, but attain a nonzero duality gap: \[ \langle C, X^\mu \rangle - \langle b, y^\mu \rangle = \langle C, X^\mu \rangle - \langle \mathcal{A}(X^\mu), y^\mu \rangle = \langle C - \mathcal{A}^*(y^\mu), X^\mu \rangle = \langle X^\mu, Z^\mu \rangle = n\mu. \]

The central path exists and is unique.

Theorem 2.3 states that the limit of the central path leads to a solution of the SDP. Therefore, the basic concept of IPM is to follow the central path and converge to the optimal solution.

2.4.2 The AHO Newton Direction

We will focus on the central path equation (2.18) and for simplicity of notation, we will rename \((X^\mu,y^\mu,Z^\mu)\) as \((X,y,Z)\). Discarding the positive semidefiniteness condition for now, we can view (2.18) as finding a root to the following function \[\begin{equation} F(X,y,Z) = \begin{pmatrix} \mathcal{A}^*(y) + Z - C \\ \mathcal{A}(X) - b \\ X Z - \mu \mathrm{I} \end{pmatrix} = 0. \tag{2.19} \end{equation}\] However, there is an issue with \(F\) defined as above. The input of the function \((X,y,Z)\) lives in \(\mathbb{S}^{n} \times \mathbb{R}^{m} \times \mathbb{S}^{n}\), but the output lives in \(\mathbb{S}^{n} \times \mathbb{R}^{m} \times \mathbb{R}^{n \times n}\) because \(XZ\) is not guaranteed to be symmetric (two symmetric matrices may not commute). Therefore, Newton’s method cannot be directly applied.

An easy way to fix this issue is to treat the input and output of \(F\) as both \(\mathbb{R}^{n \times n} \times \mathbb{R}^{m} \times \mathbb{R}^{n \times n}\). This could work, however, leads to nonsymmetric Newton iterates.

(Alizadeh, Haeberly, and Overton 1998) proposed a better idea – to equivalently rewrite (2.19) as \[\begin{equation} F(X,y,Z) = \begin{pmatrix} \mathcal{A}^*(y) + Z - C \\ \mathcal{A}(X) - b \\ \frac{1}{2}(X Z + Z X) - \mu \mathrm{I} \end{pmatrix} = 0. \tag{2.20} \end{equation}\]

The next proposition states that (2.20) and (2.19) are indeed equivalent.

Proposition 2.2 (Symmetrized Central Path) If \(X,Z \succeq 0\), then \[ XZ = \mu \mathrm{I}\Leftrightarrow XZ + ZX = 2 \mu \mathrm{I}. \]

Proof. The \(\Rightarrow\) direction is obvious. To show the “” direction, write \(X = Q \Lambda Q^\top\) with \(QQ^\top= \mathrm{I}\).

Now that the input and output domains of \(F\) in (2.20) are both \(\mathbb{S}^{n} \times \mathbb{R}^{m} \times \mathbb{S}^{n}\), we can apply Newton’s method to solving the system of equations. For ease of implementation, let us denote \[ x = \mathrm{svec}(X), \quad z = \mathrm{svec}(Z), \quad c = \mathrm{svec}(C), \] \[ A^\top= \begin{bmatrix} \mathrm{svec}(A_1) & \mathrm{svec}(A_2) & \cdots & \mathrm{svec}(A_m) \end{bmatrix} \] and rewrite (2.20) as \[\begin{equation} F(x,y,z) = \begin{pmatrix} A^\top y + z - c \\ A x - b \\ \frac{1}{2}\mathrm{svec}(XZ + ZX) - \mathrm{svec}(\mu \mathrm{I}) \end{pmatrix} = 0. \tag{2.21} \end{equation}\] The Jacobian of \(F\) reads \[\begin{equation} J_F = \begin{bmatrix} 0 & A^\top& \mathrm{I}\\ A & 0 & 0 \\ P & 0 & Q \end{bmatrix} \tag{2.22} \end{equation}\] where \[ P = Z \otimes_{\mathrm{s}}\mathrm{I}, \quad Q = X \otimes_{\mathrm{s}}\mathrm{I}, \] due to the symmetric kronecker product introduced before \[ \frac{1}{2}\mathrm{svec}(XZ + ZX) = (Z \otimes_{\mathrm{s}}\mathrm{I}) x = (X \otimes_{\mathrm{s}}\mathrm{I}) z. \] Let \[ r_d = c - A^\top y - z, \quad r_p = b - Ax, \quad r_c = \mathrm{svec}(\mu \mathrm{I}) - \frac{1}{2}\mathrm{svec}(XZ + ZX), \] be the dual, primal, and complementarity residuals, applying Newton’s method to (2.21) gives us the Newton direction as the solution to the following linear system \[\begin{equation} \begin{bmatrix} 0 & A^\top& \mathrm{I}\\ A & 0 & 0 \\ P & 0 & Q \end{bmatrix} \begin{bmatrix} \Delta x \\ \Delta y \\ \Delta z \end{bmatrix} = \begin{bmatrix} r_d \\ r_p \\ r_c \end{bmatrix}. \tag{2.23} \end{equation}\] One can directly form the block matrix in (2.23) and solve the linear system. However, leveraging the sparsity of the linear system, we can do better.

We can first use the third equation to eliminate \(\Delta z\): \[\begin{equation} \Delta z = Q^{-1}(r_c - P \Delta x). \tag{2.24} \end{equation}\] Then we can use the first equation to eliminate \(\Delta x\): \[\begin{equation} \Delta x = - P^{-1}Q r_d + P^{-1}r_c + P^{-1}Q A^\top\Delta y. \tag{2.25} \end{equation}\] Then we are left with a single equation of \(\Delta y\): \[\begin{equation} \underbrace{ A P^{-1}Q A^\top}_{M} \Delta y = r_p + A P^{-1}(Q r_d - r_c), \tag{2.26} \end{equation}\] which is called the Schur system.

2.4.3 Basic Algorithm

With the AHO Newton direction worked out above, we can formulate the basic primal-dual path following interior point algorithm.

Choose \(X,Z\) strictly feasible for (2.5) and (2.6), \(0 \leq \sigma < 1\), define \[ \mu = \sigma \frac{\langle X, Z \rangle}{n} \]

Step along the Newton direction \[ x \leftarrow x + \alpha \Delta x, \quad y \leftarrow y + \beta \Delta y, \quad z \leftarrow z + \beta \Delta z, \] where the step sizes \(\alpha, \beta\) are chosen as \[ \alpha = \min (1, \tau \hat{\alpha}), \quad \beta = \min (1, \tau \hat{\beta}) \] with \(\tau \in (0,1)\) and \(\hat{\alpha}, \hat{\beta}\) computed by \[\begin{equation} \hat{\alpha} = \sup \{ \bar{\alpha} \mid x + \bar{\alpha} \Delta x \succeq 0 \}, \quad \hat{\beta} = \sup \{ \bar{\beta} \mid z + \bar{\beta} \Delta z \succeq 0 \}. \tag{2.27} \end{equation}\]

To compute the maximum step sizes \(\hat{\alpha}\) and \(\hat{\beta}\) in (2.27), let \[ X = \mathrm{smat}(x), \quad \Delta X = \mathrm{smat}(\Delta x) \] be the reconstructed matrices from the corresponding symmetric vectorizations. We can first perform a Cholesky factorization of \(X\) \[ X = LL^\top. \] Then we perform a matrix similarity transformation \[ X + \bar{\alpha} \Delta X \mapsto L^{-1}(X + \bar{\alpha} \Delta X) L^{-\top} = \mathrm{I}+ \bar{\alpha} L^{-1}\Delta X L^{-\top}, \] which does not change the eigenvalues of \(X + \bar{\alpha} \Delta X\). Therefore, the maximum step size \(\hat{\alpha}\) is \[ \hat{\alpha}^{-1}= \lambda_{\max}(- L^{-1}\Delta X L^{-\top}), \] assuming \(\Delta X\) is not PSD.

2.4.4 Nondegeneracy

Since the algorithm above is an application of Newton’s method to the system of equations (2.21), the key to understand its asymptotic behavior is to analyze the Jacobian (2.22) at the optimal solution. If the Jacobian at the optimal solution is nonsingular, then one can show that the algorithm above has asymptotic quadratic convergence rate.

(Alizadeh, Haeberly, and Overton 1998) provided a sufficient condition for the Jacobian to be nonsingular, which is called nondegeneracy.

Definition 2.3 (Nondegeneracy) Let \((X,y,Z)\) be a solution to the SDP pair (2.5) and (2.6) and satisfies the KKT optimality conditions (2.17). In this case, \(X\) and \(Z\) can be simultaneously diagonalized \[ X = Q \mathrm{Diag}(\lambda_1,\dots,\lambda_n) Q^\top, \quad Z = Q \mathrm{Diag}(\omega_1,\dots,\omega_n)Q^\top \] by some orthogonal matrix \(Q\). We assume \[ \lambda_1, \geq \dots \geq \lambda_n, \quad \omega_1 \leq \dots \omega_n. \] Due to \(XZ = 0\), we have \[ \lambda_i \omega_i = 0,\quad i=1,\dots,n. \]

Let \(X\) have rank \(r\) with positive eigenvalues \(\lambda_1,\dots,\lambda_r\), and partition \(Q = [Q_1, Q_2]\) where the colums of \(Q_1\) are eigenvectors corresponding to \(\lambda_1,\dots,\lambda_r\). We say \((X,y,Z)\) satisfies the strict complementarity and primal and dual nondegeneracy conditions if the following hold:

(Strict Complementarity) \(\mathrm{rank}(Z) = n - r\).

(Primal Nondegeneracy) The matrices \[\begin{equation} \begin{bmatrix} Q_1^\top A_k Q_1 & Q_1^\top A_k Q_2 \\ Q_2^\top A_k Q_1 & 0 \end{bmatrix}, \quad k=1,\dots,m \tag{2.28} \end{equation}\] are linearly independent in \(\mathbb{S}^{n}\).

(Dual Nondegeneracy) The matrices \[\begin{equation} Q_1^\top A_k Q_1, \quad k=1,\dots,m \tag{2.29} \end{equation}\] span the space \(\mathbb{S}^{r}\).

When strict complementarity holds, primal nondegeneracy implies the dual optimal solution is unique, and dual nondegeneracy implies the primal optimal solution is unique.

Strict complementarity and primal-dual nondegeneracy also immediately imply, recalling \(r^{\Delta} = r(r+1)/2\), \[\begin{equation} r^{\Delta} \leq m \leq r^{\Delta} + r (n - r), \tag{2.30} \end{equation}\] where \(r^\Delta \leq m\) is due to (2.29) and \(m \leq r^{\Delta} + r (n - r)\) is due to (2.28).

From (2.30), we can understand that when \(m\) is very large, then primal nondegeneracy must fail and the dual solution must not be unique. This is a property we will see later when we study the moment-SOS hierarchy.

2.4.5 Generalization

The AHO Newton direction is obtained by symmetrizing the centrality condition \(XZ = \mu \mathrm{I}\) as \[ \frac{1}{2}(XZ + ZX) = \mu \mathrm{I}. \]

It turns out that is not the only way (and also not the most robust way) to symmetrize the centrality condition. A more popular symmetrization is due to (Monteiro 1997) and (Y. Zhang 1998), and is typically referred to as the Monteiro-Zhang family (Monteiro 1998). The basic idea is to symmetrize the centrality condition as \[\begin{equation} \frac{1}{2}(P XZ P^{-1}+ P^{-\top}XZ P^\top) = \mu \mathrm{I}. \tag{2.31} \end{equation}\] Clearly, the AHO direction is a special case of (2.31) with the choice \(P = \mathrm{I}\).

Two other most popular Newton directions are:

Nesterov-Todd (Todd, Toh, and Tütüncü 1998), where \(P\) is obtained by first solving \[ WZW = X, \] and then setting \[ P = W^{-1/2}. \] This is the Newton method implemented by SeDuMi.

HKM, which was proposed and analyzed by multiple authors (Helmberg et al. 1996), (Kojima, Shindoh, and Hara 1997), computes \[ P = Z^{1/2}. \] This is the Newton method implemented by SDPT3 and MOSEK.

2.5 Applications

2.5.1 Lyapunov Stability

One of the earliest and most important applications of semidefinite optimization is in the context of dynamical systems and control theory. The main reason is that it is possible to characterize the dynamical properties of a system (e.g., stability, contraction) in terms of algebraic statements such as the feasibility of specific forms of inequalities. We provide a simple example in the case of Lyapunov stability for linear dynamical systems. For a comprehensive overview, see (S. Boyd et al. 1994).

Consider a discrete-time linear dynamical system \[\begin{equation} x_{k+1} = A x_k,\quad k=0,\dots \tag{2.32} \end{equation}\] where \(x \in \mathbb{R}^{n}\) is called the state of the system, and \(k\) indexes the time. An important property that one wants to understand about the system (2.32) is whether it is stable, i.e., given an initial state \(x_0\), does \(x_k\) tend to zero as \(k\) tends to infinity.

It is well-known that \(x_k\) tends to zero for all initial conditions \(x_0\) if and only if \(|\lambda_i(A)| < 1, \forall i=1,\dots,n\), i.e., all the eigenvalues of the transition matrix \(A\) lies inside the unit circle.

Equivalently, one can also certify the stability of the system if a Lyapunov function \[\begin{equation} V(x_k) = x_k^\top P x_k \tag{2.33} \end{equation}\] is given that satisfies the following matrix inequalities.

Lemma 2.6 (Lyapunov Stability) Given \(A \in \mathbb{R}^{n \times n}\), the following statements are equivalent:

All eigenvalues of \(A\) lie strictly inside the unit circle, i.e., \(|\lambda_i(A)| < 1, i=1,\dots,n\)

There exists a matrix \(P \in \mathbb{S}^{n}\) such that \[ P \succ 0, \quad A^\top P A - P \prec 0. \]

Proof. 2 \(\Rightarrow\) 1: Let \(\lambda \in \mathbb{C}^{}\) and \(v \neq 0 \in \mathbb{C}^{n}\) be a pair of eigenvalue and eigenvector of \(A\) such that \(A v = \lambda v\). Since \(A^\top P A - P \prec 0\), we have \[ v^* (A^\top P A - P) v < 0 \Rightarrow (\lambda v)^* P (\lambda v) - v^* P v < 0 \Rightarrow (|\lambda|^2 - 1) (v^* P v) < 0. \] Since \(P \succ 0\), we have \(v^* P v > 0\). Hence, \(|\lambda|^2 - 1 < 0\) and \(|\lambda| < 1\).

1 \(\Rightarrow\) 2: Construct \[ P := \sum_{k=0}^{\infty} (A^k)^\top A^k. \] The sum converges by the condition that \(|\lambda_i(A)| < 1,\forall i\) and \(P \succ 0\). Then we have \[ A^\top P A - P = \sum_{k=1}^{\infty} (A^k)^\top A^k - \sum_{k=0}^{\infty} (A^k)^\top A^k = - \mathrm{I}\prec 0, \] proving the result.

Intuitively, the Lyapunov function (2.33) defines a positive definite energy function. The fact that \(P \succ 0\) implies \(V(0) = 0\) and \(V(x) > 0\) for any \(x\) that is nonzero. The condition \(A^\top P A - P \prec 0\) guarantees that the system’s energy strictly decreases along any possible system trajectory. To see this, write \[ V(x_{k+1}) - V(x_k) = V(Ax_k) - V(x_k) = x_k^\top(A^\top P A - P) x_k < 0, \forall x_k \neq 0. \] Therefore, the existence of a Lyapunov function guarantees any system trajectory will converge to the origin.

The nice property of constructing such a Lyapunov function is that it goes beyond linear systems and can be similarly generalized to nonlinear systems, where one can compute a stability certificate from semidefinite optimization. See for example this lecture notes.

Control Design. Consider now the case where \(A\) is not stable, but we can use a linear feedback controller to stabilize the system, i.e., \[ u_k = K x_k, \quad x_{k+1} = A x_k + B u_k = (A+ BK) x_k, \] where the matrix \(K \in \mathbb{R}^{m \times n}\) is the feedback law we wish to design. We wish to design \(K\) using convex optimization, in particular semidefinite optimization.

Involving Lemma~2.6, we see that designing \(K\) to stabilize \(A + BK\) is equivalent to finding \[\begin{equation} P \succ 0, \quad (A + BK)^\top P (A + BK) - P \prec 0. \tag{2.34} \end{equation}\] Using the Schur complement technique in Proposition 2.1, we know (2.34) is equivalent to \[ \begin{bmatrix} P & (A + BK)^\top P \\ P(A+BK) & P \end{bmatrix} \succ 0. \] This matrix inequality is, however, not jointly convex in the unknowns \((P,A)\). To address this issue, we can pre-multiply and post-multiply the equation by \(\mathrm{BlkDiag}(P^{-1}, P^{-1})\), which leads to \[\begin{equation} \begin{split} \begin{bmatrix} P^{-1}& \\ & P^{-1}\end{bmatrix} \begin{bmatrix} P & (A + BK)^\top P \\ P(A+BK) & P \end{bmatrix} \begin{bmatrix} P^{-1}& \\ & P^{-1}\end{bmatrix} \succ 0 \Leftrightarrow \\ \begin{bmatrix} P^{-1}& P^{-1}(A + BK)^\top\\ (A+BK)P^{-1}& P^{-1}\end{bmatrix} \succ 0. \end{split} \end{equation}\] Now with a change of variable \(Q:= P^{-1}\), \(Y = K P^{-1}\), we have \[ \begin{bmatrix} Q & Q A^\top+ Y^\top B^\top\\ A Q + BY & Q \end{bmatrix} \succ 0, \] which is indeed a linear matrix inequality in the unknowns \((Q,Y)\). Solving the LMI, we can recover \[ P = Q^{-1}, K = Y Q^{-1}. \]

2.5.2 Linear Quadratic Regulator

Another (somewhat surprising) application of semidefinite optimization is that it can be used to convexify the direct optimization of the linear quadratic regulator problem (one of the cornerstones in modern optimal control). In fact, such a convex formulation is the key to prove why policy optimization works in reinforcement learning, as least in the linear quadratic case (Hu et al. 2023) (Mohammadi et al. 2021).

Consider a continuous-time linear dynamical system \[\begin{equation} \dot{x} = A x + B u, \quad x \in \mathbb{R}^{n}, u \in \mathbb{R}^{m}, A \in \mathbb{R}^{n \times n}, B \in \mathbb{R}^{n \times m}, \tag{2.35} \end{equation}\] where \(x\) denotes the state and \(u\) denotes the control. Consider the following optimal control problem \[\begin{equation} \begin{split} \min_{u(t)} & \quad \mathbb{E} \left\{ \int_{t=0}^{\infty} x(t)^\top Q x(t) + u(t)^\top R u(t) dt \right\} \\ \mathrm{s.t.}& \quad \dot{x} = A x + Bu, \quad x(0) \sim \mathcal{N}(0,\Omega) \end{split} \tag{2.36} \end{equation}\] where \(Q,R\succ 0\) are known cost matrices, and \(x(0)\) is supposed to satisfy the zero-mean Gaussian distribution with covariance \(\Omega \succ 0\). In the case where \(A,B,Q,R\) are perfectly known to the control designer, and \((A,B)\) is controllable (to be defined soon), problem (2.36) can be solved exactly and optimally, with the optimal controller being a linear feedback controller of the form \[ u(t) = -Kx(t), \] where \[ K = R^{-1}B^\top S, \] with \(S\) the unique positive definite solution to the continuous-time algebraic Riccati equation (ARE) \[ SA + A^\top S - SBR^{-1}B^\top S + Q = 0. \]

Policy Optimization. The control and reinforcement learning community are interested in whether it is possible to directly find the optimal feedback policy \(K\), i.e., directly solving \[\begin{equation} \begin{split} \min_{K \in \mathcal{S}} & \quad \mathbb{E} \left\{ \int_{t=0}^{\infty} x(t)^\top Q x(t) + u(t)^\top R u(t) dt \right\} \\ \mathrm{s.t.}& \quad \dot{x} = A x + Bu, \quad u = -Kx, \quad x(0) \sim \mathcal{N}(0,\Omega). \end{split} \tag{2.37} \end{equation}\] Note that problem (2.37) is different from the original problem (2.36) in the sense that we assume the knowledge that the optimal controller is linear and directly optimize \(K\). The feasible set \(\mathcal{S}\) contains the set of stabilizing controllers, i.e., \[\begin{equation} \mathcal{S}= \{ K \in \mathbb{R}^{m \times n} \mid A - BK \text{ is Hurwitz} \}, \tag{2.38} \end{equation}\] where a matrix \(A\) is Hurwitz means \(\mathrm{Re}(\lambda_i(A)) < 0\) for all \(i=1,\dots,n\), i.e., all the eigenvalues of \(A\) have strictly negative real parts. When \(A - BK\) is Hurwitz, the closed-loop system is stable and the integral in (2.37) will not blow up (so it is sufficient to consider the class of stabilizing controllers).

We will first observe that the direct optimization (2.37) is nonconvex, because the feasible set \(\mathcal{S}\) is nonconvex. The following example is adapted from (Fazel et al. 2018).

Example 2.6 (Nonconvex Set of Stabilizing Controllers) Consider the dynamical system (2.35) with \[ A = 0_{3 \times 3}, \quad B = \mathrm{I}_3. \] It is easy to show that both \[ K_1 = \begin{bmatrix} 1 & 0 & -10 \\ -1 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad K_2 = \begin{bmatrix} 1 & -10 & 0 \\ 0 & 1 & 0 \\ -1 & 0 & 1 \end{bmatrix} \] are stabilizing controllers, as \[ \lambda(A - BK_1) = (-1,-1,-1), \quad \lambda(A - BK_2) = (-1,-1,-1). \] However, pick \[ K = \frac{K_1 + K_2}{2} = \begin{bmatrix} 1 & -5 & -5 \\ -0.5 & 1 & 0 \\ -0.5 & 0 & 1 \end{bmatrix}, \] we have \[ \lambda(A - BK) = (-3.2361, 1.2361, -1) \] and \(K\) does not stabilize the closed-loop system.

Convex Parameterization with Semidefinite Optimization. We will now show that it is possible to reparameterize problem (2.37) as a semidefinite optimization so it becomes convex. To do so, we note \[ x^\top Q x + u^\top R u = x^\top Q x + x^\top K^\top R K x = x^\top(Q + K^\top R K)x^\top= \mathrm{tr}((Q + K^\top R K)xx^\top), \] and therefore the objective of (2.37) can be written as \[\begin{equation} \mathbb{E} \left\{ \int_{0}^{\infty} \mathrm{tr}((Q + K^\top R K) xx^\top) dt \right\} = \mathrm{tr}\left( (Q + K^\top R K) \mathbb{E} \left\{ \int_{0}^{\infty} xx^\top dt \right\} \right). \end{equation}\] For any given initial condition, the solution of the closed-loop dynamics is given by the matrix exponential \[ x(t) = e^{(A-BK)t} x(0). \] For the distribution of initial conditions, we have \[ \mathbb{E} \left\{ x(t)x(t)^\top \right\} = e^{(A-BK)t} \mathbb{E}\{ x(0)x(0)^\top \} e^{(A-BK)^\top t} = e^{(A-BK)t} \Omega e^{(A-BK)^\top t}. \] The integral of this function, call it \(X\), represents the expected energy of the closed-loop response \[ X = \mathbb{E} \left\{ \int_{0}^{\infty} xx^\top dt \right\} . \] For every \(K \in \mathcal{S}\) that is stabilizing, \(X\) can be computed as the solution to the Lyapunov equation \[\begin{equation} (A - BK) X + X(A - BK)^\top+ \Omega = 0. \tag{2.39} \end{equation}\] As a result, the objective of (2.37) is simplied as \[ \mathrm{tr}((Q + K^\top R K) X), \] with \(X\) solves (2.39). We arrive at an optimization problem \[\begin{equation} \begin{split} \min_{X,K} & \quad \mathrm{tr}(QX) + \mathrm{tr}(K^\top R K X) \\ \mathrm{s.t.}& \quad (A-BK) X + X (A - BK)^\top+ \Omega = 0 \\ & \quad X \succ 0 \end{split}, \tag{2.40} \end{equation}\] which is still nonconvex. We do a change of variable \(Y = KX\), so \(K = Y X ^{-1}\) and obtain \[\begin{equation} \begin{split} \min_{X,Y} & \quad \mathrm{tr}(QX) + \mathrm{tr}(X^{-1}Y^\top R Y) \\ \mathrm{s.t.}& \quad AX - BY + XA^\top- Y^\top B^\top+ \Omega = 0 \\ & \quad X \succ 0 \end{split}. \tag{2.41} \end{equation}\] The second term in the objective is still nonconvex, but we can use the Schur complement technique again, and arrive at the final optimization \[\begin{equation} \begin{split} \min_{X,Y,Z} & \quad \mathrm{tr}(QX) + \mathrm{tr}(Z) \\ \mathrm{s.t.}& \quad AX - BY + XA^\top- Y^\top B^\top+ \Omega = 0 \\ & \quad X \succ 0 \\ & \quad \begin{bmatrix} Z & R^{1/2} Y & \\ Y^\top R^{1/2} & X \end{bmatrix} \succeq 0 \end{split}. \tag{2.42} \end{equation}\] Note that the last two matrix inequalities of (2.42) implies, by Schur complement \[ Z - R^{1/2}Y X^{-1}Y^\top R^{1/2} \succeq 0 \Rightarrow \mathrm{tr}(Z - R^{1/2}Y X^{-1}Y^\top R^{1/2}) \geq 0. \] Since the optimization is trying to minimize \(\mathrm{tr}(Z)\), it will push the equality to hold \[ Z - R^{1/2}Y X^{-1}Y^\top R^{1/2} = 0, \] and thus \[ \mathrm{tr}(Z) = \mathrm{tr}(R^{1/2}Y X^{-1}Y^\top R^{1/2}) = \mathrm{tr}(X^{-1}Y^\top R Y). \]

Example 2.7 (Convex LQR) For the same linear system as in Example 2.6, let \(Q = R = \mathrm{I}_3\).

If we solve the optimal feedback controller \(K\) by solving the ARE, we get the optimal controller is \(K^\star = \mathrm{I}_3\).

If we implement the SDP in (2.42), we also get \(K^\star = \mathrm{I}_3\), confirming the correctness of the convex parameterization. The implementation is shown below and can be found here.

%% Show the feasible set is nonconvex

A = zeros(3,3);

B = eye(3);

K1 = [1, 0, -10; -1, 1, 0; 0, 0, 1];

K2 = [1, -10, 0; 0, 1, 0; -1, 0, 1];

K = (K1 + K2)/2;

%% get the groundtruth LQR controller

Q = eye(3); R = eye(3);

K_lqr = lqr(A,B,Q,R,zeros(3,3));

Omega = eye(3);

%% solve SDP

addpath(genpath('../YALMIP'))

addpath(genpath('../../../mosek'))

X = sdpvar(3,3);

Y = sdpvar(3,3,'full');

Z = sdpvar(3,3);

M = [Z, R*Y; Y'*R, X];

F = [

A*X - B*Y + X*A' - Y'*B' + Omega == 0;

M >= 0

];

obj = trace(Q*X) + trace(Z);

optimize(F,obj);

K_sdp = value(Y)*inv(value(X));