Chapter 5 Stability Analysis

Optimal control formulates a control problem via the language of mathematical optimization. However, there are control problems, and sometimes even the very basic control problems, that cannot be easily stated in the optimal control formulation.

For example, suppose our goal is to swing up a pendulum to the upright position and stabilize it there. You may want to formalize the problem as \[\begin{equation} \min_{u(t) \in \mathbb{U}} \int_{0}^{\infty} \Vert x(t) - x_d \Vert^2 dt, \quad \text{subject to} \quad \dot{x} = f(x,u), x(0) = x_0, \tag{5.1} \end{equation}\] where \(x_d\) is the desired upright position for the pendulum. However, does the solution of problem (5.1), if exists, guarantee the stabilization of the pendulum at the upright position? The answer is unclear without a rigorous proof.

However, after a slight change of perspective, the optimal control problem may be formulated to better match the goal. Suppose there exists a region, \(\Omega\), in the state space such that as long as the pendulum enters \(\Omega\), there always exists a sequence of control to bring the pendulum to the goal state \(x_d\), then we can simply formulate a different optimal control problem \[\begin{equation} \min_{u(t) \in \mathbb{U}} \int_{0}^{T} \Vert u(t) \Vert^2 dt, \quad \text{subject to} \quad x(0)=x_0, x(T) \in \Omega, \dot{x} = f(x,u), \tag{5.2} \end{equation}\] where now it is very clear, if a solution exists to problem (5.2), then we will definitely achieve our goal. This is because the constraint \(x(T) \in \Omega\) guarantees that we will be able to stabilize the pendulum, and the cost function of (5.2) simply encourages minimum control effort along the way.

This highlights that, sometimes the formulation of a problem may deserve more thoughts than the actual solution. Of course the formulation (5.2) may be much more difficult to solve. In fact, does the set \(\Omega\) exist, and if so, how to describe it?

This is the main focus of this chapter: to introduce tools that can help us analyze the stability of uncontrolled and controlled nonlinear systems. Specifically, we will introduce the notion of stability certificates, which are conditions that, if hold, certify the stability of the system (e.g., in the set \(\Omega\)). Interestingly, you will see that the notion of stability certificates is intuitive and easy, but what is really challenging is to find and compute the stability certificates. We will highlight the power and also limitation of computational tools, especially those that are based on convex optimization (see Appendix B for a review of convex optimization).

5.1 Autonomous Systems

Let us first focus on autonomous systems, i.e., systems whose dynamics do not depent on time (and control). We introduce different concepts of stability and ways to certify them.

5.1.1 Concepts of Stability

Consider the autonomous system \[\begin{equation} \dot{x} = f(x) \tag{5.3} \end{equation}\] where \(x \in \mathbb{X} \subseteq \mathbb{R}^n\) is the state and \(f: \mathbb{R}^n \rightarrow \mathbb{R}^n\) is the (potentially nonlinear) dynamics.

Before talking about concepts of stability, we need to define an equilibrium point.

Definition 5.1 (Equilibrium Point) A state \(x^\star\) is called an equilibrium point of system (5.3) if \(f(x^\star) = 0\), i.e., once the system reaches \(x^\star\), it stays at \(x^\star\).

For example, a linear system \[ \dot{x} = A x \] has a single equilibrium point \(x^\star = 0\) when \(A\) is nonsingular, and an infinite number of equilibrium points when \(A\) is singular (those equilibrium points lie in the kernel of matrix \(A\)).

When analyzing the behavior of a dynamical system around the equilibrium point, it is often helpful to “shift” the dynamics equation so that \(0\) is the equilibrium point. For example, if we are interested in the behavior of system (5.3) near the equilibrium point \(x^\star\), we can create a new variable \[ z = x - x^\star, \] so that \[\begin{equation} \dot{z} = \dot{x} = f(x) = f(z + x^\star). \tag{5.4} \end{equation}\] Clearly, \(z^\star = 0\) is an equilibrium point for the shifted system (5.4).

Let us find the equilibrium points of a simple pendulum.

Example 5.1 (Equilibrium Points of A Simple Pendulum) Consider the dynamics of an uncontrolled pendulum

\[\begin{equation} \begin{cases} \dot{\theta} = \dot{\theta} \\ \ddot{\theta} = - \frac{1}{ml^2} (b \dot{\theta} + mgl \sin \theta) \end{cases} \tag{5.5} \end{equation}\] where \(\theta\) is the angle between the pendulum and the vertical line, and \(x = [\theta,\dot{\theta}]^T\) is the state of the pendulum (\(m,g,l,b\) denote the mass, gravity constant, length, and damping constant, respectively).

To find the equilibrium points of the pendulum, we need the right hand sides of (5.5) to be equal to zero: \[ \dot{\theta} = 0, \quad - \frac{1}{ml^2} (b \dot{\theta} + mgl \sin \theta) = 0. \] The solutions are easy to find \[ x^\star = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \quad \text{or} \quad \begin{bmatrix} \pi \\ 0 \end{bmatrix}, \] corresponding to the bottomright and upright positions of the pendulum, respectively.

The pendulum dynamics has two equilibrium points, but our physics intuition tells us these two equilibrium points are dramatically different. Specifically, the bottomright equilibrium \(x^\star = [0,0]^T\) is such that if you perturb the pendulum around the equilibrium, the pendulum will go back to that equilibrium; the upright equilibrium \(x^\star = [\pi,0]^T\) is such that if you perturb the pendulum (even just a little bit) around the equilibrium, it will diverge from that equilibrium.

This physical intuition is exactly what we want to formalize as the concepts of stability.

In the following, we focus on the nonlinear autonomous system (5.3) with \(f(0) = 0\), i.e., \(x^\star = 0\) is an equilibrium point. We now formally define the different concepts of stability.

Definition 5.2 (Lyapunov Stability) The equilibrium point \(x=0\) is said to be stable in the sense of Lyapunov if, for any \(R > 0\), there exists \(r >0\) such that if \(\Vert x(0) \Vert < r\), then \(\Vert x(t) \Vert < R\) for all \(t \geq 0\). Otherwise, the equilibrium point is unstable.

For a system that is Lyapunov stable around \(x=0\), the definition says that, if we want to constrain the trajectory of the system to be within the ball \(B_R = \{ x \mid \Vert x \Vert < R \}\), then we can always find a smaller ball \(B_r = \{ x \mid \Vert x \Vert < r \}\) such that if the system starts within \(B_r\), it will remain in the larger ball \(B_R\).

On the other hand, if the system is not Lyapunov stable at \(x=0\), then there exists at least one ball \(B_R\), such that no matter how close the system’s initial condition is to the origin, it will eventually exit the ball \(B_R\). The following exercise is left for you to verify the instability of the Van der Pol oscillator.

Exercise 5.1 (Instability of the Van der Pol oscillator) Show that the Van der Pol oscillator \[ \begin{cases} \dot{x}_1 = x_2 \\ \dot{x}_2 = - x_1 + (1-x_1^2) x_2 \end{cases} \] is unstable at the equilibrium point \(x = 0\).

Lyapunov stability does not guarantee the system trajectory will actually converge to \(x =0\). Instead, asymptotic stability will ask the system trajectory to converge to \(x=0\).

Definition 5.3 (Asymptotic Stability and Domain of Attraction) The equilibrium point \(x = 0\) is said to be asymptotically stable if (i) it is Lyapunov stable, and (ii) there exists some \(r > 0\) such that \(x(0) \in B_r\) implies \(x(t) \rightarrow 0\) as \(t \rightarrow \infty\).

The domain of attraction (for the equilibrium \(x=0\)) is the largest set of points in the state space such that trajectories initiated at those points will converge to the equilibrium point. That is, \[ \Omega(x^\star) = \{ x \in \mathbb{X} \mid x(0) = x \Longrightarrow \lim_{t \rightarrow \infty} x(t) = x^\star \}. \] The ball \(B_r\) is a domain of attraction for the equilibrium point \(x=0\), but not necessarily the largest domain of attraction.

You may immediately realize that in the definition of asymptotic stability, we require Lyapunov stability to hold first. Is this necessary? i.e., does there exist a system where trajectories eventually converge to zero, but is not stable in the sense of Lyapunov? You should work out the following exercise.

Exercise 5.2 (Vinograd System) Show that for the Vinograd dynamical system (Vinograd 1957) \[ \begin{cases} \dot{x} = \frac{x^2(y-x) + y^5}{(x^2+y^2)(1 + (x^2+y^2)^2)} \\ \dot{y} = \frac{y^2 (y - 2x)}{(x^2+y^2)(1 + (x^2+y^2)^2)} \end{cases}, \] all system trajectories converge to the equilibrium point \((x,y) = 0\), but the equilibrium point is not stable in the sense of Lyapunov.

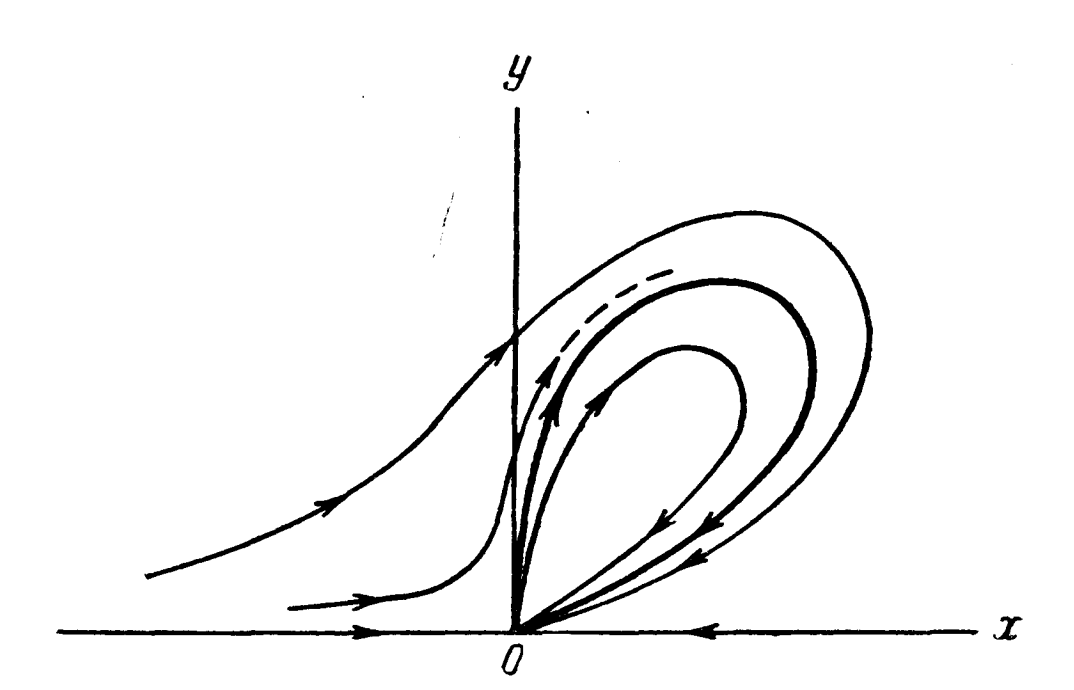

(Hint: the system trajectories will behave like the following plot.)

Figure 5.1: Trajectories of the Vinograd system. Copied from the original article of Vinograd.

In many cases, we want the convergence of the system trajectory towards \(x=0\) to be fast, thus bringing in the notion of exponential stability.

Definition 5.4 (Exponential Stability) An equilibrium point \(x=0\) is said to be exponentially stable, if there exists a ball \(B_r\) such that as long as as \(x(0) \in B_r\), then \[ \Vert x(t) \Vert \leq \alpha \Vert x(0) \Vert e^{-\lambda t}, \quad \forall t, \] for some \(\alpha > 0\) and \(\lambda > 0\) (\(\lambda\) is called the rate of exponential convergence).

Exponential stability implies asymptotic stability (and certainly also Lyapunov stability). What is nice about exponential stability is that we can quantify the distance of the system trajectory to the equilibrium point as a function of time (as long as we know the constants \(\alpha, \Vert x(0) \Vert, \lambda\)). In many safety-critical applications, we need such performance guarantees. For example, in Chapter 6.5, we will see the application of exponential stability in observer-feedback control.

All the concepts of stability we have mentioned so far only talk about the stability of the system locally around the equilibrium point \(x=0\) (via arguments like \(B_r\) and \(B_R\)). It would be much nicer if we can guarantee stability of the system globally, i.e., no matter where the system starts in the state space \(\mathbb{X}\), its trajectoy will converge to \(x=0\).

Definition 5.5 (Global Asymptotic and Exponential Stability) The equilibrium point \(x = 0\) is said to be globally asymptotically (exponentially) stable if asymptotic (exponential) stability holds for any initial states. That is, \[ \forall x \in \mathbb{X}, \quad x(0) = x \Longrightarrow \begin{cases} \lim_{t \rightarrow \infty} x(t) = 0 & \text{global asymptotic stability} \\ \exists \alpha, \lambda > 0, \text{ s.t. } \Vert x(t) \Vert \leq \alpha \Vert x(0) \Vert e^{-\lambda t} & \text{global exponential stability} \end{cases} \]

This concludes our definitions of stability for nonlinear systems (Definition 5.2-5.5). It is worth mentioning that the concepts of stability are complicated (refined) here due to our focus on nonlinear systems. For linear systems, the concepts of stability are simpler. Specifically, all local stability properties of linear systems are also global and asymptotic stability is equal to exponential stability. In fact, for a linear time-invariant system \(\dot{x} = Ax\), it is either asymptotically (exponentially) stable, or marginally stable, or unstable. Moreover, we can fully characterize the stability property by inspecting the eigenvalues of \(A\) (you can find a refreshment of this in Appendix C.1).

How do we characterize the stability property of a nonlinear system? If someone gave me a nonlinear system (5.3), how can I provide a certificate to her that the system is stable or unstable (I cannot use eigenvalues anymore in this case)? Let us describe some of these certificates below.

5.1.2 Stability by Linearization

A natural idea is to linearize, if possible, the nonlinear system (5.3) at a given equilibrium point \(x^\star\) and inspect the stability of the linearized system (for which we can compute eigenvalues). Therefore, the key question here is how does the stability and instability of the linearized system relate to the stability and instability of the original nonlinear system.

Theorem 5.1 (Stability by Linearization) Assume \(x=0\) is an equilibrium point of system (5.3) and \(f\) is continuously differentiable. Let \[\begin{equation} \dot{x} = Ax, \quad A = \frac{\partial f}{\partial x} \Big\vert_{x=0} \tag{5.6} \end{equation}\] be the linearized system at \(x=0\). The following statements are true about the stability relationship between (5.3) and (5.6).

If the linearized system (5.6) is strictly stable (i.e., all eigenvalues of \(A\) have strictly negative real parts), then the original system (5.3) is asymptotically stable at \(x=0\).

If the linearized system (5.6) is unstable (i.e., at least one eigenvalue of \(A\) has strictly positive real part), then the original system (5.3) is unstable at \(x=0\).

If the linearized system (5.6) is marginally stable (i.e., all eigenvalues of \(A\) have nonpositive real parts, and at least one eigenvalue has zero real part), then the stability of the original system (5.3) at \(x=0\) is indeterminate.

Theorem 5.1 is actually quite useful when we want to quickly examine the local stability of a nonlinear system around a given equilibrium point, as we will show in the next example.

Example 5.2 (Stability of A Simple Pendulum by Linearization) Consider the simple pendulum dynamics (5.5) in Example 5.1. Without loss of generality, let \(m=1,l=1,b=0.1\). The Jacobian of the nonlinear dynamics reads \[ A = \frac{\partial f}{\partial x} = \begin{bmatrix} 0 & 1 \\ -\frac{g}{l} \cos \theta & -\frac{b}{ml^2} \end{bmatrix}. \]

At the bottomright equilibrium point \(\theta =0, \dot{\theta} = 0\), the matrix \(A\) has two eigenvalues \[ -0.0500 \pm 3.13i, \] and hence the pendulum is asymptotically stable at the bottomright equilibrium point.

At the upright equilibrium point \(\theta =\pi, \dot{\theta} = 0\), the matrix \(A\) has two eigenvalues \[ 3.08, \quad -3.18, \] and hence the pendulum is unstable at the upright equilibrium point.

The linearization method is easy to carry out. However, it tells us nothing about global stability or exponential stability. Moreover, when the linearized system is marginally stable, the stability of the orignal system is inconclusive. In the next, we will introduce a more general, and perhaps the most popular framework for analyzing the stability of nonliear systems.

5.1.3 Lyapunov Analysis

The basic idea of Lyapunov analysis is quite intuitive: if can find an “energy-like” scalar function for a system such that the scalar function is zero at an equilibrium point and positive everywhere else, and the time-derivative of the scalar function is zero at the equilibrium point but negative otherwise, then we know that the energy of the system will eventually converge to zero, and hence the state trajectory will converge to the equilibrium point. Lyapunov analysis was originally inspired by the energy function of a mechanical system: the total energy of a mechanical system (potental energy plus kinetic energy) will settle down to its minimum value if it is constantly dissipated (e.g., due to damping). However, the concept of a Lyapunov function is much broader than the energy function, i.e., it can be an arbitrary abstract function without any physical meaning.

Let us now introduce the concept of a Lyapunov function.

Definition 5.6 (Positive Definite Function) A scalar function \(V(x)\) is said to be locally positive definite in a ball \(B_R\) if \[ V(0) = 0 \quad \text{and} \quad V(x) > 0, \forall x \in B_R \backslash \{0\}, \] and globally positive definite if \[ V(0) = 0 \quad \text{and} \quad V(x) > 0, \forall x \in \mathbb{X} \backslash \{0\}, \] where \(\mathbb{X}\) is the entire state space.

A function \(V(x)\) is said to be negative definite if \(-V(x)\) is positive definite.

A function \(V(x)\) is said to be positive semidefinite if the “\(>\)” sign is replaced by the “\(\geq\)” sign in the above equations.

A function \(V(x)\) is said to be negative semidefinite if \(-V(x)\) is positive semidefinite.

For example, when \(\mathbb{X} = \mathbb{R}^2\), the function \(V(x) = x_1^2 + x_2^2\) is positive definite, but the function \(V(x) = x_1^2\) is only positive semidefinite.

Definition 5.7 (Lyapunov Function) In the ball \(B_R\), if a function \(V(x)\) is positive definite, and its time derivative along any system trajectory \[ \dot{V}(x) = \frac{\partial V}{\partial x} f(x) \] is negative semidefinite (we assume the partial derivative \(\frac{\partial f}{\partial x}\) exists and is continuous), then \(V(x)\) is said to be a Lyapunov function for system (5.3). Note that \(\dot{V}(x^\star) = 0\) at any equilibrium point \(x^\star\) by definition.

With the introduction of positive definite and Lyapunov functions, we are now ready to use them to certify different concepts of stability.

Theorem 5.2 (Lyapunov Local Stability) Consider the nonlinear system (5.3) in a ball \(B_R\) with equilibrium point \(x=0\), if there exists a scalar function \(V(x)\) (with continuous partial derivatives) such that

\(V(x)\) is positive definite (in \(B_R\))

\(\dot{V}(x)\) is negative semidefinite (in \(B_R\))

then the equilibrium point \(x=0\) is stable in the sense of Lyapunov (cf. Definition 5.2).

Moreover,

Let us apply Theorem 5.2 to the simple pendulum.

Example 5.3 (Lyapunov Local Stability for A Simple Pendulum) Consider the pendulum dynamics (5.5). The total energy of a pendulum is \[\begin{equation} V(x) = \frac{1}{2} ml^2 \dot{\theta}^2 + mgl (1 - \cos \theta). \tag{5.7} \end{equation}\] Clearly, \(V(x)\) is positive definite on the entire state space, and the only point where \(V(x) = 0\) is the equilibrium point \(\theta = 0, \dot{\theta} = 0\).

Let us compute the time derivative of \(V(x)\): \[ \dot{V}(x) = ml^2 \dot{\theta} \ddot{\theta} + mgl \sin \theta \dot{\theta} = ml^2 \dot{\theta} \left( -\frac{1}{ml^2}(b \dot{\theta} + mgl \sin\theta) \right) + mgl \sin \theta \dot{\theta} = -b \dot{\theta}^2 , \] which is clearly negative semidefinite. In fact, \(\dot{V}(x)\) is precisely the energy dissipation rate due to damping. By Theorem 5.2 we conclude that the equilibrium point is stable in the sense of Lyapunov.

Note that with this choice of \(V(x)\) as in (5.7), we actually cannot certify asymptotic local stability of the bottomright equilibrium point. So a natural question is, can we find a better Lyapunov function that indeed certifies asymptotic stability?

The answer is yes. Consider a different Lyapunov function \[\begin{equation} \tilde{V}(x) = \frac{1}{2} ml^2 \dot{\theta}^2 + \frac{1}{2} ml^2 \left( \frac{b}{ml^2}\theta + \dot{\theta} \right)^2 + 2mgl (1 - \cos \theta), \tag{5.8} \end{equation}\] which is positive definite and admits a single zero-value point \(\theta = 0, \dot{\theta} = 0\) that is also the bottomright equilibrium point. Simplifying \(\tilde{V}(x)\) we can get \[\begin{align} \tilde{V}(x) &= ml^2 \dot{\theta}^2 + 2mgl(1-\cos \theta) + \frac{1}{2} ml^2 \left( \frac{b^2}{m^2 l^4} \theta^2 + \frac{2b}{ml^2} \theta \dot{\theta} \right) \\ &= 2V(x) + \frac{1}{2} ml^2 \left( \frac{b^2}{m^2 l^4} \theta^2 + \frac{2b}{ml^2} \theta \dot{\theta} \right). \end{align}\]

The time derivative of the new function \(\tilde{V}(x)\) is \[\begin{align} \dot{\tilde{V}}(x) &= 2 \dot{V}(x) + \frac{ml^2}{2} \left( \frac{2b^2}{m^2 l^4} \theta \dot{\theta} + \frac{2b}{ml^2} (\dot{\theta}^2 + \theta \ddot{\theta}) \right) \\ & = 2\dot{V}(x) + b\dot{\theta}^2 + \left( \frac{b^2}{ml^2} \theta\dot{\theta} + b \theta \left( -\frac{1}{ml^2} (b\dot{\theta} + mgl \sin \theta) \right) \right) \\ & = -b \left( \dot{\theta}^2 + \frac{g}{l} \theta \sin \theta \right). \end{align}\] \(\dot{\tilde{V}}(x)\) is negative definite locally around the equilibrium point (locally \(\sin\theta \approx \theta\)). Therefore, with the new Lyapunov function \(\tilde{V}(x)\) we can certify asymptotic stability.

Interestingly, \(V(x)\) is intuitive (the total energy of the pendulum system), but it fails to certify asymptotic local stability (as least by just using Theorem 5.2). \(\tilde{V}(x)\) does not have any physical intuition, but it successfully certifies local asymptotic stability.

In Section 5.1.4, we will see that when using \(V(x)\) with the invariant set theorem, we can actually still certify the asymptotic stability of the pendulum around the bottomright equilibrium.

In many applications, we desire to certify the global stability of an equilibrium point. The following theorem states that if in addition the scalar function \(V(x)\) is radially unbounded, then global stability can be certified.

Theorem 5.3 (Lyapunov Global Stability) For the autonomous system (5.3), suppose there exists a scalar function \(V(x)\) with (continuous partial derivatives) such that

\(V(x)\) is positive definite;

\(\dot{V}(x)\) is negative definite;

\(V(x) \rightarrow \infty\) as \(\Vert x \Vert \rightarrow \infty\),

then the equilibrium point \(x = 0\) is globally asymptotically stable (cf. Definition 5.5).

Moreover, if in addition to the three conditions above

- \(\dot{V}(x) \leq - \alpha V(x)\) for some \(\alpha > 0\), then the equilibrium point is globally exponentially stable.

5.1.4 Invariant Set Theorem

Through Theorem 5.2, Theorem 5.3, and Example 5.3, we see that in order to certify asymptotic stability, the time derivative \(\dot{V}(x)\) is required to be positive definite. However, in many cases, with Example 5.3 being a typical one, \(\dot{V}(x)\) is only negative semidefinite, which makes it difficult to certify asymptotic stability.

In this section, we will introduce the invariant set theorem that can help us reason about asymptotic stability even when \(\dot{V}(x)\) is only negative semidefinite.

Let us first introduce the notion of an invariant set.

Definition 5.8 (Invariant Set) A set \(G\) is an invariant set for a dynamical system (5.3) if every system trajectory that starts within \(G\) remains in \(G\) for all future time. Formally, \[ x(0) \in G \Longrightarrow x(t) \in G,\forall t. \]

A trivial invariant set is the entire state space \(\mathbb{X}\). Another example of an invariant set is the singleton \(\{x^\star \}\) with \(x^\star\) being an equilibrium point. A nontrivial invariant set is the domain of attraction of an equilibrium point (cf. Definition 5.3).

We now state the local invariant set theorem.

Theorem 5.4 (Local Invariant Set) Consider the autonomous system (5.3), and let \(V(x)\) be a scalar function with continuous partial derivatives. Assume that

the sublevel set \(\Omega_{\rho} = \{ x \in \mathbb{X} \mid V(x) < \rho \}\) is bounded for some \(\rho > 0\), and

\(\dot{V}(x) \leq 0\) for all \(x \in \Omega_{\rho}\).

Let \(\mathcal{R}\) be the set of all points within \(\Omega_{\rho}\) such that \(\dot{V}(x) = 0\), and \(\mathcal{M}\) be the largest invariant set in \(\mathcal{R}\). Then, every trajectory that starts in \(\Omega_{\rho}\) will converge to \(\mathcal{M}\) as \(t \rightarrow \infty\).

With this theorem, we can now revisit the pendulum example 5.3.

Example 5.4 (Revisiting the Local Stability of A Simple Pendulum) In Example 5.3, using the Lyapunov function \[ V(x) = \frac{1}{2} ml^2 \dot{\theta}^2 + mgl(1 - \cos\theta), \] with time derivative \[ \dot{V}(x) = -b\dot{\theta}^2, \] we were only able to verify the stability of the bottomright equilibrium point in the sense of Lyapunov.

Now let us use the invariant set theorem 5.4 to show the asymptotic stability of the bottomright equilibrium point.

First it is easy to see that the sublevel set of \(V(x)\) is bounded. For example, with \(\rho = \frac{1}{4} mgl\), \[\begin{align} V(x) < \frac{1}{4} mgl \Rightarrow \frac{1}{2} ml^2 \dot{\theta}^2 < \frac{1}{4} mgl \Rightarrow \dot{\theta}^2 < \frac{1}{2} \frac{g}{l} \\ V(x) < \frac{1}{4} mgl \Rightarrow mgl(1-\cos\theta) < \frac{1}{4} mgl \Rightarrow \cos\theta > \frac{3}{4} \Rightarrow \theta \in (-\arccos \frac{3}{4}, \arccos \frac{3}{4}). \end{align}\]

The set \(\mathcal{R}\), including all the points in \(\Omega_{\rho}\) such that \(\dot{V}(x) = 0\) is \[ \mathcal{R} = \{ x \in \Omega_{\rho} \mid \dot{\theta} = 0 \}. \] We now claim that the largest invariant set \(\mathcal{M}\) in \(\mathcal{R}\) is just the single equilibrium point \(x = [0,0]^T\). We can prove this by contradiction. Suppose there is a different point \(x' = [\theta,0]^T\) with \(\theta \neq 0\) also belonging to the invariant set \(\mathcal{M}\), then \[ \ddot{\theta} = -\frac{1}{ml^2} (b \dot{\theta} + mgl \sin \theta) = - \frac{g}{l} \sin\theta \neq 0, \] which means \(\dot{\theta}\) will immediately become nonzero, and hence the trajectory will exit \(\mathcal{R}\) and also \(\mathcal{M}\). So that point cannot belong to the invariant set.

Now by Theorem 5.4, we conclude the bottomright equilibrium point is asymptotically stable.

Note that through this analysis we also obtain \(\Omega_{\rho}\) as a domain of attraction for the bottomright equilibrium point.

Similarly, with the addition of the radial unboundedness of \(V(x)\), we have a global version of the invariant set theorem.

Theorem 5.5 (Global Invariant Set) For the autonomous system (5.3), let \(V(x)\) be a scalar function with continuous partial derivatives that satisfies

\(V(x) \rightarrow \infty\) as \(\Vert x \Vert \rightarrow \infty\), and

\(\dot{V}(x) \leq 0\) over the entire state space.

Let \(\mathcal{R} = \{ x\in \mathbb{X} \mid \dot{V}(x)= 0 \}\), and \(\mathcal{M}\) be the largest invariant set in \(\mathcal{R}\). Then all system trajectories asymptotically converge to \(\mathcal{M}\) as \(t \rightarrow \infty\).

5.1.5 Computing Lyapunov Certificates

All the Theorems we have stated so far (Theorems 5.2, 5.3, 5.4, and 5.5) are very general and powerful tools for certifying stability of nonlinear systems. However, the key requirement for applying the results is a Lyapunov function \(V(x)\) that verifies different types of nonnegativity constraints.

How to find these functions?

In Example 5.3, we have seen that physical intuition can help us find a good Lyapunov function (5.7). Nevertheless, it did not quite give us what we want in terms of asymptotic stability. Instead, a hand-crafted function (5.8) helped us certify local asymptotic stability.

Wouldn’t it be cool that we can design an algorithm to find the Lyapunov certificates for us?

A closer look at the Theorems 5.2, 5.3, 5.4, and 5.5 tells us the key property of a Lyapunov certificate is that it needs to satisfy the positivity (or negativity) constraint for all states inside a set. This is a nontrivial and difficult requirement, because even if we were given a function \(V(x)\), naively evaluting if \(V(x)\) is nonnegative inside a set requires enumeration over all the states in the set, which is impractical given that the set is continuous and has infinite number of states.5 When the dynamics (5.3) is linear, searching for Lyapunov functions is well understood and presented in Appendix C.1. However, when the dynamics is nonlinear, things can get very complicated.

In the next, I want to introduce a general framework for searching Lyapunov certificates for nonlinear systems that is based on convex optimization.

This framework, although having deep connections with many other disciplines such as algebraic geometry, theoretical computer science, and mathematical optimization, is based on a very simple intution that we all have since high school.

Example 5.5 (A Simple Example for Certifying Nonnegativity) Suppose I give you a polynomial of a single variable \(x \in \mathbb{R}\) \[ p(x) = x^2 + 2x + 1 \] and ask you if \(p(x) \geq 0\) for all \(x\). You would not hesitate to answer “yes”, because you know \[ p(x) = (x+1)^2 \] is the square of \(x+1\) and hence must be nonnegative.

Let me make it more challenging. Suppose I give you a different polynomial \[ p(x) = -x^4 + 2 x^2 + x + 1 \] and ask you if \(p(x)\) is nonnegative for any \(x \in [-1,1]\) (instead of any \(x \in \mathbb{R}\)). At first glance, it seems much harder to answer this question because (i) we have a constraint set \(x \in [-1,1]\), and (ii) the polynomial \(p(x)\) has a higher degree and it is not a polynomial that we are very famliar with (compared to \(p(x) = x^2 + 2x +1\)).

However, if I show you that \(p(x)\) can be written as \[\begin{equation} p(x) = -x^4 + 2 x^2 + 2x + 1 = (x+1)^2 + x^2 (1 - x^2), \tag{5.9} \end{equation}\] it becomes easy again to certify that \(p(x)\) is nonnegative for any \(x \in [-1,1]\). Why?

First notice that \((x+1)^2 \geq 0\) for any \(x \in \mathbb{R}\),

Then notice that \(1 - x^2 \geq 0\) for any \(x \in [-1,1]\), and \(x^2 \geq 0\) for any \(x\). Therefore, \(x^2 (1-x^2) \geq 0\) for any \(x \in [-1,1]\).

Combining the above two reasonings, it becomes clear \(p(x)\) is nonnegative for any \(x \in [-1,1]\).

What we have learned from this simple example is that

Given a polynomial \(p(x)\) and a constraint set \(x \in \mathcal{X} \subseteq \mathbb{R}^n\), if we can write \(p(x)\) as a sum of a finite number of products \[ p(x) = \sum_{i=1}^K \sigma_i(x) g_i(x) \] where \(\sigma_i(x)\) is a polynomial that we know is always nonnegative for any \(x \in \mathbb{R}^n\) (just like \((x+1)^2\) and \(x^2\) in (5.9)), and \(g_i(x)\) is a polynomial that we know is always nonnegative for any \(x\) in the constraint set \(\mathcal{X}\) (just like \(1-x^2\) for the set \([-1,1]\) in (5.9)), then we have a certificate that \(p(x) \geq 0\) for any \(x \in \mathcal{X}\).

With this simple intuition, let me now formalize the framework of sum of squares (SOS) certificates for proving nonnegativity (also known as positivstellensatz, or in short P-satz).

Positivstellensatz, Sum of Squares, and Convex Optimization

Basic Semialgebraic Set. Let \(x = [x_1,\dots,x_n] \in \mathbb{R}^n\) be a list of variables, we define a basic semialgebraic set as \[\begin{equation} \mathcal{X} = \{ x \in \mathbb{R}^{n} \mid p_i(x) = 0, i = 1,\dots,l_{\mathrm{eq}}; p_i(x) \geq 0, i=l_{\mathrm{eq}}+1,\dots,l_{\mathrm{eq}} + l_{\mathrm{ineq}} \} \tag{5.10} \end{equation}\] where \(p_i(x),i=1,\dots,l_{\mathrm{eq}}+l_{\mathrm{ineq}}\) are polynomial functions in \(x\). In other words, the set \(\mathcal{X}\) is a subset of \(\mathbb{R}^n\) that is defined by \(l_{\mathrm{eq}}\) equality constraints and \(l_{\mathrm{ineq}}\) inequality constraints.

Observe that a basic semialgebraic set can capture a lot of the common constraint sets, such as a unit sphere, a unit ball, and a box (try this for yourself).

Positivstellensatz. We are now given the same question as in Example 5.5. Suppose I give you another polynomial function \(p_0(x)\), how can you tell me if \(p_0(x)\) is nonnegative for any \(x\) in the basic semialgebraic set \(\mathcal{X}\)? That is, to verify if \[ p_0(x) \geq 0, \quad \forall x \in \mathcal{X}. \]

Formalizing the intution obtained from Example 5.5, you will say if someone can produce a decomposition of \(p_0(x)\) as \[\begin{equation} p_0(x) = \sigma_0(x) + \sum_{i=1}^{l_{\mathrm{ineq}}} \sigma_i(x) p_{i + l_{\mathrm{eq}}}(x) + \sum_{i=1}^{l_{\mathrm{eq}}} \lambda_i(x) p_{i}(x), \tag{5.11} \end{equation}\] where \(\sigma_0,\sigma_1,\dots,\sigma_{l_{\mathrm{ineq}}}\) are “some type of” polynomials that we know are always nonnegative (for any \(x \in \mathbb{R}^n\)), and \(\lambda_1,\dots,\lambda_{l_{\mathrm{eq}}}\) are arbitrary polynomials. Then I have a “certificate” that \(p_0(x) \geq 0\) for any \(x \in \mathcal{X}\).

Why? The reasoning is exactly the same as before.

\(\sigma_0(x) \geq 0\) for any \(x\),

\(\sigma_i(x) p_{i+l_{\mathrm{eq}}}(x) \geq 0, i=1,\dots,l_{\mathrm{ineq}}\) for any \(x \in \mathcal{X}\), because (a) \(\sigma_i(x) \geq 0\) for any \(x\), and (b) \(p_{i+l_{\mathrm{eq}}}(x) \geq 0\) for any \(x \in \mathcal{X}\) by definition of the basic semialgebraic set (5.10),

\(\lambda_i(x) p_i (x) = 0, i=1,\dots,l_{\mathrm{eq}}\) for any \(x \in \mathcal{X}\) by definition of the basic semialgebraic set (5.10).

We call \(\sigma_i\)’s “nonnegative polynomial multipliers”, and \(\lambda_i\)’s “polynomial multipliers”.

Sum-of-Squares. Now it comes the key question: what type of polynomials should we choose as the nonnegative polynomial multipliers? Ideally, this type of polynomials should

be always (trivially) nonnegative, and

have a nice representation for its unknown parameters (coefficients).

Looking back at our choice of multipliers, i.e., \((x+1)^2\) and \(x^2\) in Example 5.5, it is natural to come up with the choice of a “sum-of-squares” (SOS) polynomial.

Definition 5.9 (Sum-of-Squares Polynomial) A polynomial \(\sigma(x)\) is called an SOS polynomial if \[ \sigma(x) = \sum_{i=1}^k q_i^2(x), \] i.e., \(\sigma(x)\) can be written as a sum of \(k\) squared polynomials.

OK, an SOS polynomial is trivially nonnegative (satisfying requirement (a) above), but does it have a nice representation for its parameters? The following Lemma gives us an affirmative answer.

Lemma 5.1 (SOS Polynomial and Positive Semidefinite Matrix) A polynomial \(\sigma(x)\) is SOS if and only if \[ \sigma(x) = [x]_d^T Q [x]_d \] for some \(Q \succeq 0\), where \([x]_d\) is the vector of monomials in \(x\) of degree up to \(d\). For example, if \(x \in \mathbb{R}^2\) and \(d = 2\), then \[ [x]_2 = [1,x_1,x_2,x_1^2,x_1x_2,x_2^2]^T. \]

With the choice of \(\sigma(x)\) as SOS polynomials, we are now ready to explicitly search for a nonnegativity certificate in the form of (5.11): \[\begin{equation} \begin{split} \text{find} & \quad \{\sigma_i \}_{i=0}^{l_{\mathrm{ineq}}}, \{ \lambda_i \}_{i=1}^{l_{\mathrm{eq}}} \\ \text{subject to} & \quad p_0(x) = \sigma_0(x) + \sum_{i=1}^{l_{\mathrm{ineq}}} \sigma_i(x) p_{i + l_{\mathrm{eq}}}(x) + \sum_{i=1}^{l_{\mathrm{eq}}} \lambda_i(x) p_{i}(x), \\ & \quad \sigma_i \text{ is SOS}, i=0,\dots,l_{\mathrm{ineq}}, \\ & \quad \lambda_i \text{ is polynomial}, i=1,\dots,l_{\mathrm{eq}} \\ & \quad \mathrm{deg}(\sigma_0) \leq 2 \kappa, \mathrm{deg}(\sigma_i p_{i+l_{\mathrm{eq}}}) \leq 2 \kappa, i=1,\dots,l_{\mathrm{ineq}}, \\ & \quad \mathrm{deg}(\lambda_i p_i) \leq 2 \kappa, i=1,\dots,l_{\mathrm{eq}}. \end{split} \tag{5.12} \end{equation}\]

Bounding the Degree. The careful reader realizes that in (5.12) we have added constraints on the degrees of the polynomial multipliers \(\sigma_i\)’s and \(\lambda_i\)’s.6 Precisely, we choose an integer \(\kappa\), which we call the relaxation order, such that \[ 2 \kappa \geq \max \{ \mathrm{deg}(p_i(x)) \}_{i=0}^{l_{\mathrm{eq}} + l_{\mathrm{ineq}}}, \] and restrict the products \(\sigma_i p_{i+l_{\mathrm{eq}}}\)’s and \(\lambda_i p_i\)’s to have degrees at most \(2\kappa\). With this, we are explicitly limiting the degrees of the multipliers \(\sigma_i\)’s and \(\lambda_i\)’s, and hence asking the formulation (5.12) to search for a finite number of parameters (otherwise, if the degree of the multipliers is unbounded, then the number of parameters to be searched is infinite).

Convex Optimization. The last crucial (and surprising) observation is that the problem (5.12) is a convex optimization! This is due to the following three reasons

The polynomial multipliers \(\lambda_i\)’s can be fully parametrized by their coefficients, and these coefficients can be arbitrary vectors. Precisely, if \(\lambda(x)\) is a polynomial with degree up to \(d\), then \[ \lambda(x) = c^T [x]_d, \] where \([x]_d\) is the vector of monomials in \(x\) of degree up to \(d\), and \(c\) is the vector of coefficients.

The SOS multipliers \(\sigma_i\)’s can be fully parametrized by their coefficients, and these coefficients are positive semidefinite matrices, according to Lemma 5.1.

The equality constraint of decomposing \(p_0(x)\) as a sum of products in (5.12) therefore becomes a set of affine equality constraints on the parameters of \(\lambda_i\)’s and \(\sigma_i\)’s, by matching coefficients of the monomials on the left-hand size and the right-hand side.

Therefore, the problem (5.12) is a convex semidefinite program (SDP). There are multiple software packages, e.g., SOSTOOLS, YALMIP, SumOfSquares.py, that allow us to model our problem in the form of (5.12), convert the formulation into SDPs, and pass them to SDP solvers (such as MOSEK). We will see an example of this soon.

Extensions. I want to congratulate, and welcome you to enter the world of SOS relaxations! Like I said before, this is an active area of research and the framework I just introduced is just a tip of the iceberg. Therefore, before I end this tutorial, I want to point out several extensions of the SOS framework.

Necessary Condition. We have seen that a decomposition in the form of (5.12) is a sufficient condition to prove the nonnegativity of \(p_0(x)\). Is it also a necessary condition? That is, for any \(p_0(x)\) that is nonnegative on the set \(\mathcal{X}\), does it admit a decomposition in the form of (5.12)? In general, the answer is no, and there exist nonnegative polynomials that cannot be written in the form of SOS decompositions (e.g., the Motzkin’s polynomial). However, with certain assumptions on the set \(\mathcal{X}\), the decomposition (5.12) is also necessary for nonnegativity! A well-known assumption is called the Archimedean condition (which, roughly speaking, requires the set \(\mathcal{X}\) to be compact)). I suggest you to read (Blekherman, Parrilo, and Thomas 2012) for more details.

Global Polynomial Optimization. The SOS framework can be used for global optimization of polynomials in a straightforward way. Consider the polynomial optimization problem (POP) \[ \min_{x \in \mathcal{X}} p_0(x), \] where one seeks the global minimum of the polynomial \(p_0(x)\) on the set \(\mathcal{X}\). A POP is generally a nonconvex optimization problem, and it is difficult to obtain a globally optimal solution. However, with a slight change of perspective, we can write the problem above equivalently as \[\begin{equation} \begin{split} \max & \quad \gamma \\ \text{subject to} & \quad p_0(x) - \gamma \geq 0, \quad \forall x \in \mathcal{X}. \end{split} \tag{5.13} \end{equation}\] Basically I want to push the lower bound \(\gamma\) as high as possible. The constraint in (5.13) asks \(p_0(x) -\gamma\) to be nonnegative on \(\mathcal{X}\). With the SOS framework introduced above, we can naturally relax it to \[\begin{equation} \begin{split} \max & \quad \gamma \\ \text{subject to} & \quad p_0(x) - \gamma \quad \text{is SOS on} \quad \mathcal{X}, \end{split} \tag{5.14} \end{equation}\] where the “SOS on \(\mathcal{X}\)” constraint is exactly the problem (5.12). Therefore, we have relaxed the nonconvex optimization (5.13) into a convex problem (5.14)! Moreover, by increasing the relaxation order \(\kappa\), we obtain a sequence of lower bounds that asymptotically converge to the true global optimum of the nonconvex problem (5.13). This is called Lasserre’s hierarchy of moment-SOS relaxations, originally proposed by Lasserre in the seminal work (Jean B. Lasserre 2001). As this name suggests, the dual problem to the SOS relaxation (5.14) is called the moment relaxation. Lasserre’s hierarchy has recently gained a lot of attention due to the empirical observation in many engineering disciplines that the convergence to global optimum is finite, i.e., by solving the convex problem (5.14) at a finite relaxation order \(\kappa\), an exact global optimizer of the original nonconvex problem (5.13) can be extracted. For a pragmatic introduction to the moment relaxation, I suggest to read Section 2.2 of (H. Yang and Carlone 2022). For more applications of Lasserre’s hierarchy, please refer to (Jean Bernard Lasserre 2009).

Scalability. I have to warn you that there is no free lunch. The fact that so many challenging problems can be relaxed or restated as convex optimization problems should send you an alert. Does this mean that we can use convex optimization to solve all the challenging problems? Well, although we hope this is the case, in practice we are limited by the computational resources. The caveat is that the problem (5.12) and (5.14), despite being convex, grows very large as the dimension \(n\) and relaxation order \(\kappa\) increases. Another way of saying this is that, we seek to solve small-to-medium scale nonconvex problems with large-scale convex problems. Unfortunately, today’s SDP solver cannot solve all the problems we formulate, and hence a major research direction in the mathematical optimization community is to develop SDP solvers that are more scalable. You can read (H. Yang et al. 2022) and references therein for more details.

Non-SOS Certificates. Nobody is preventing us to use a different choice of nonnegative polynomial multipliers (other than SOS multipliers) in (5.11). For example, one can use a decomposition as the sum of nonnegative circuit polynomials (Wang 2022) or signomials (Murray, Chandrasekaran, and Wierman 2021). However, to the best of my knowledge, non-SOS certificates are far less popular than SOS certificates.

There are many other extensions to the SOS framework, and a complete enumeration is beyond the scope of this lecture notes. For the connection between SOS and theoretical computer science, you can see the lecture notes by Boaz Barak and David Steurer. There are also more recent monographs about SOS, for example (Magron and Wang 2023) and (Nie 2023). I plan to introduce these in more details in an upcoming graduate-level class at Harvard.

That was a long detour from Lyapunov analysis! The SOS machinery will come back later when we study multiple other topics in optimal control and estimation. But now let us show how to tackle the problem of computing Lyapunov certificates using the SOS machinery.

According to Theorem 5.2, given a set \(\mathcal{X}\) that contains an equilibrium point \(x^\star\), if we can find a Lyapunov function \(V(x)\) such that \(V(x)\) is positive definite on \(\mathcal{X}\) and \(\dot{V}(x)\) is negative definite on \(\mathcal{X}\), then the equilibrium point \(x^\star\) is locally asymptotically stable. With the SOS machinery, we can search for a \(V(x)\) that is a polynomial as \[\begin{align} \text{find} & \quad V(x) \\ \text{subject to} & \quad V(x) - \epsilon_1 \Vert x - x^\star \Vert^2 \quad \text{is SOS on} \quad \mathcal{X} \\ & \quad - \epsilon_2 \Vert x - x^\star \Vert^2 - \frac{\partial V(x)}{\partial x} f(x) \quad \text{is SOS on} \quad \mathcal{X} \\ & \quad V(x^\star) = 0, \end{align}\] where \(\epsilon_1, \epsilon_2 > 0\) are (small) positive constants. This is a convex optimization problem, just like (5.12) (try to convince yourself my claim is true). Similarly, we can choose a relaxation order \(\kappa\) and solve the above problem. If a solution exists, then we find a valid Lyapunov certificate.

Let us apply it to the simple pendulum to synthesize local stability certificates.

Example 5.6 (Computing Lyapunov Local Stability Certificate for the Simple Pendulum with Convex Optimization) The SOS framework works with polynomials, so let us first write the pendulum dynamics in polynomial form via a change of coordinate \(x = [\mathfrak{s}, \mathfrak{c}, \dot{\theta}]^T\) with \(\mathfrak{s} = \sin \theta\), \(\mathfrak{c} = \cos\theta\): \[ \begin{cases} \dot{\mathfrak{s}} = \mathfrak{c} \dot{\theta} \\ \dot{\mathfrak{c}} = -\mathfrak{s} \dot{\theta} \\ \ddot{\theta} = - \frac{1}{ml^2}(b \dot{\theta} + mgl \mathfrak{s}) \end{cases}. \] We will use \(m = 1, l = 1, b=0.1\) for our numerical experiment.

We want to find a local Lyapunov certificate in the compact set \[\begin{equation} \theta \in \left[-\arccos \frac{3}{4}, \arccos \frac{3}{4} \right], \quad \dot{\theta} \in \left[- \frac{\pi}{2}, \frac{\pi}{2} \right]. \tag{5.15} \end{equation}\]

In the new coordinates \(x\), this is equivalent to the semialgebraic set \[ \mathcal{X} = \left\{ x \in \mathbb{R}^{3} \mid \mathfrak{s}^2 + \mathfrak{c}^2 = 1, \dot{\theta}^2 \leq \frac{\pi^2}{4}, \mathfrak{c} \geq \frac{3}{4} \right\}. \]

Denoting the bottomright equilibrium point as \(x_e = [0,1,0]^T\), and with \(\epsilon_1,\epsilon_2 > 0\) two positive constants, we can seek a Lyapunov function \(V(x)\) that satisfies the following conditions \[\begin{align} V(x) \geq \epsilon_1 (x - x_e)^T (x - x_e), \quad \forall x \in \mathcal{X} \tag{5.16}\\ \dot{V}(x) = \frac{\partial V}{\partial x} \dot{x} \leq - \epsilon_2 (x - x_e)^T (x - x_e), \quad \forall x \in \mathcal{X} \tag{5.17}\\ V(x_e) = 0, \quad \dot{V}(x_e) = 0 \tag{5.18} \end{align}\] where (5.16) ensures \(V(x)\) is positive definite, (5.17) ensures \(\dot{V}(x)\) is negative definite, and (5.18) ensures \(V(x),\dot{V}(x)\) vanish at the equilibrium point.

To leverage the power of convex optimization, we can relax the positivity constraints as SOS constraints \[\begin{align} V(x) - \epsilon_1 (x - x_e)^T (x - x_e) \quad \text{is SOS on} \quad \mathcal{X} \\ - \epsilon_2 (x - x_e)^T (x - x_e) - \frac{\partial V}{\partial x} \dot{x}\quad \text{is SOS on} \quad \mathcal{X} \\ V(x_e) = 0, \quad \dot{V}(x_e) = 0. \end{align}\]

If we limit the degree of \(V\) to \(2\), choose the relaxation order \(\kappa = 2\), and \(\epsilon_1 = \epsilon_2 = 0.01\), we obtain a solution \[ V(x) = 2.7982 \mathfrak{s}^2 + 0.086248 \mathfrak{s} \dot{\theta} + 2.4548\mathfrak{c}^2 + 0.88117 \dot{\theta}^2 - 16.6277 \mathfrak{c} + 14.1728 \] with the time derivative \[ \dot{V}(x) = 0.68675 \mathfrak{s} \mathfrak{c} \dot{\theta} + 0.086248* \mathfrak{c} \dot{\theta}^2 - 0.84523 \mathfrak{s}^2 - 0.65191 \mathfrak{s} \dot{\theta} - 0.17623 \dot{\theta}^2. \]

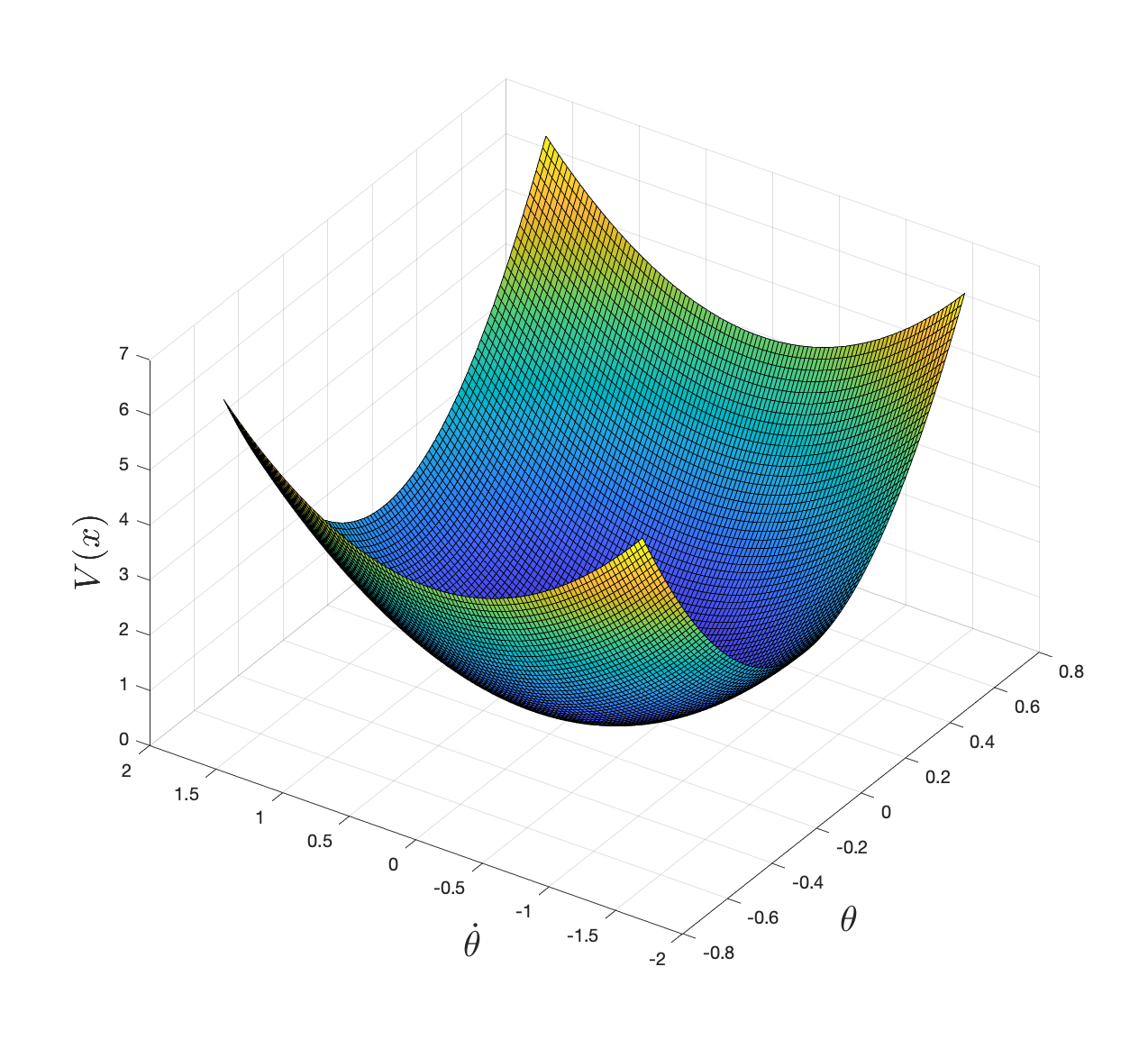

Plotting \(V(x)\) in the constraint set (5.15) using \((\theta, \dot{\theta})\) coordinates, we get

Figure 5.2: Lyapunov local stability certificate computed via convex optimization.

and verify that \(V(x)\) is locally positive definite.

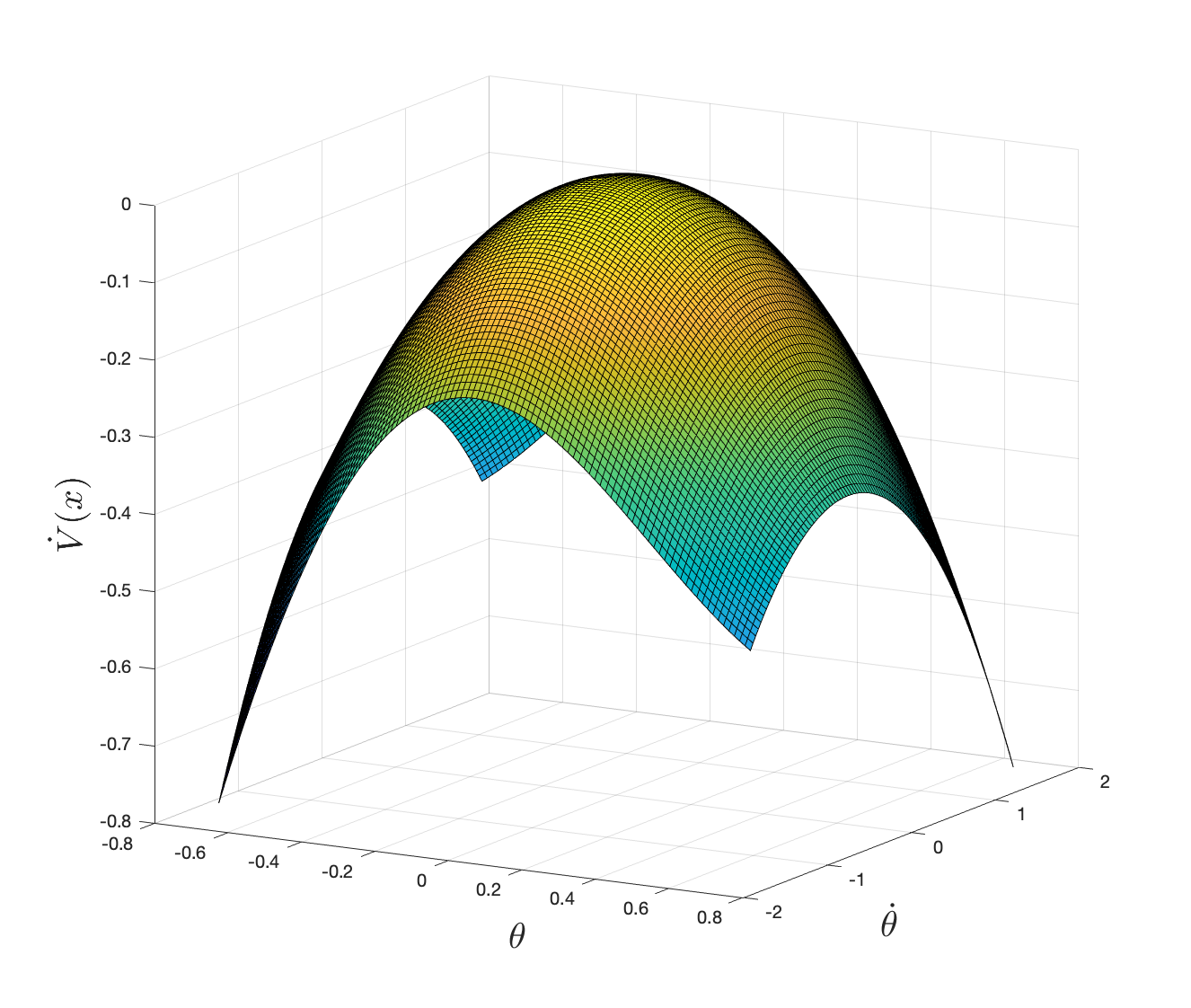

Plotting \(\dot{V}(x)\) in the constraint set (5.15) using \((\theta, \dot{\theta})\) coordinates, we get

Figure 5.3: Derivative of the Lyapunov local stability certificate computed via convex optimization.

and verify that \(\dot{V}(x)\) is locally negative definite.

You should try the code for this example here.

5.2 Controlled Systems

We can generalize the notion of a Lyapunov function for an autonomous system to the notion of a Control Lyapunov Function (CLF) for a controlled system (Sontag 1983).

Consider a controlled system \[\begin{equation} \dot{x} = f(x,u) \tag{5.19} \end{equation}\] where \(x \in \mathbb{X} \subseteq \mathbb{R}^n\) the state, \(u \in \mathbb{U} \subseteq \mathbb{R}^m\) the control, and \(f\) is continuously differentiable. Let \(x = 0, u=0\) be an equilibrium point, i.e., \(f(0,0) = 0\). The existence of a CLF guarantees that we can design a controller to stabilize the equilibrium point.

Theorem 5.6 (Control Lyapunov Function) Let \(V(x): \mathbb{X} \rightarrow \mathbb{R}\) be a continuously differentiable positive definite function, i.e., \(V(0) = 0\) and \(V(x) > 0, \forall x \in \mathbb{X} \backslash \{ 0 \}\). If there exists \(\rho > 0\) such that \(\Omega := \{ x \in \mathbb{X} \mid V(x) \leq \rho \}\) is bounded and \[\begin{equation} \min_{u \in \mathbb{U}} \dot{V}(x) = \frac{\partial V}{\partial x} f(x,u) < 0, \quad \forall x \in \Omega \backslash \{ 0 \}, \tag{5.20} \end{equation}\] then \(V(x)\) is called a control Lyapunov function.

In this case, if the system starts within the set \(\Omega\), then there exists a controller \(u(t)\) that drives the system towards the equilibrium point \(0\).

In Theorem 5.6, it is clear that \(Omega\) is a control invariant set. In fact, \(Omega\) is an inner approximation of the region of attraction to the equilibrium point \(0\).

Deploying a CLF. Now we observe that, when a CLF \(V(x)\) is given, it can help us design an asymptotically stablizing controller. Consider a special instance of the controlled system (5.19) that is control-affine \[\begin{equation} \dot{x} = f_1(x) + f_2(x)u. \tag{5.21} \end{equation}\] Clearly, the dynamics (5.21) is affine in the control \(u\). Almost all robotics systems are control affine, so (5.21) is quite general. Suppose we are given an arbitrary controller \(u_d(t)\), which may or may not stabilize the system towards the equilibrium point (e.g., \(u_d(t)\) may be obtained from neural networks). We can use the CLF \(V(x)\) to correct \(u_d(t)\) so that we always obtain a stabilizing feedback controller \[\begin{equation} \begin{split} u(x(t)) = \arg\min_{u \in \mathbb{U}} & \quad \Vert u - u_d(t) \Vert^2 \\ \text{subject to} & \quad \dot{V}(x) = \frac{\partial V}{\partial x} (f_1(x) + f_2(x) u) < 0. \end{split} \tag{5.22} \end{equation}\] Note that the optimization (5.22) is actually a convex optimization problem! Since we are minimizing over \(u\) (the state \(x(t)\) is given), the objective in (5.22) is quadratic in \(u\) and the constraint in (5.22) is linear in \(u\), implying (5.22) is a quadratic optimization problem. The CLF condition (5.20) ensures that (5.22) is always feasible. Intuitively, optimization (5.22) seeks to find the feedback controller that (a) minimally modifies the given arbitrary controller \(u_d(t)\), and (b) guarantees stabilization towards the equilibrium.

Verification and Synthesis of a CLF. When a CLF is given, deploying it to compute a stablizing controller is easy. However, verifying if a candidate function is indeed a control Lyapunov function is a highly nontrivial task. In fact, it is an active area of research. The interested reader can refer to (Kang et al. 2023) and (Dai and Permenter 2023).

5.3 Non-autonomous Systems

Lemma 5.2 (Barbalat's Lemma) Let \(f(t)\) be differentiable, if

\(\lim_{t \rightarrow \infty} f(t)\) is finite, and

\(\dot{f}(t)\) is uniformly continuous,7

then \[ \lim_{t \rightarrow \infty} \dot{f}(t) = 0. \]

Theorem 5.7 (Barbalat's Stability Certificate) If a scalar function \(V(x,t)\) satisfies

\(V(x,t)\) is lower bounded,

\(\dot{V}(x,t)\) is negative semidefinite

\(\dot{V}(x,t)\) is uniformly continuous

then \(\dot{V}(x,t) \rightarrow 0\) as \(t \rightarrow \infty\).

Proof. \(V(x,t)\) is lower bounded and \(\dot{V}\) is negative semidefinite implies the limit of \(V\) as \(t \rightarrow \infty\) is finite (note that \(V(x,t) \leq V(x(0),0)\)). Then the theorem clearly follows from Barbalat’s Lemma 5.2.

References

In fact, many of the recent works verify “neural” Lyapunov certificates (and other types of certificates) using this idea, see for example (Dawson, Gao, and Fan 2023).↩︎

The degree of a monomial is the sum of its exponents. For example, \(\mathrm{deg}(x_1 x_2^4 x_3^2) = 1 + 4 + 2 = 7\). The degree of a polynomial is the maximum degree of its monomials. For example, the polynomial \(p(x) = 1 + x_2 + x_1^2 x_2^3\) has three monomials with degrees \(0\), \(1\), and \(5\), respectively. Therefore, \(\mathrm{deg}(p) = 5\).↩︎

A sufficient condition for this to hold is that \(\ddot{f}\) exists and is bounded.↩︎